This is Part 3 of a 5-part series on AI in UX Research. If you enjoy this guide, be sure to also check out Part 1 for an overview of how AI can be applied throughout the research process and Part 2 for a deep dive into planning, recruiting, and scheduling.

In Part 2, we established principles for building with AI (model selection, context engineering, iterative development, working with nondeterminism, managing risk) before taking a deep dive into how it can help with the first step of your research process.

Today, we’re tackling possibly the hottest topic in the AI UXR space: AI-moderated interviews. But we’re not stopping there. This guide covers all of the ways you can leverage AI for the crucial step of data collection.

Before we dive in, let’s first touch on when you should (and, crucially, when you shouldn’t) use AI for data collection.

To use, or not to use, AI moderation

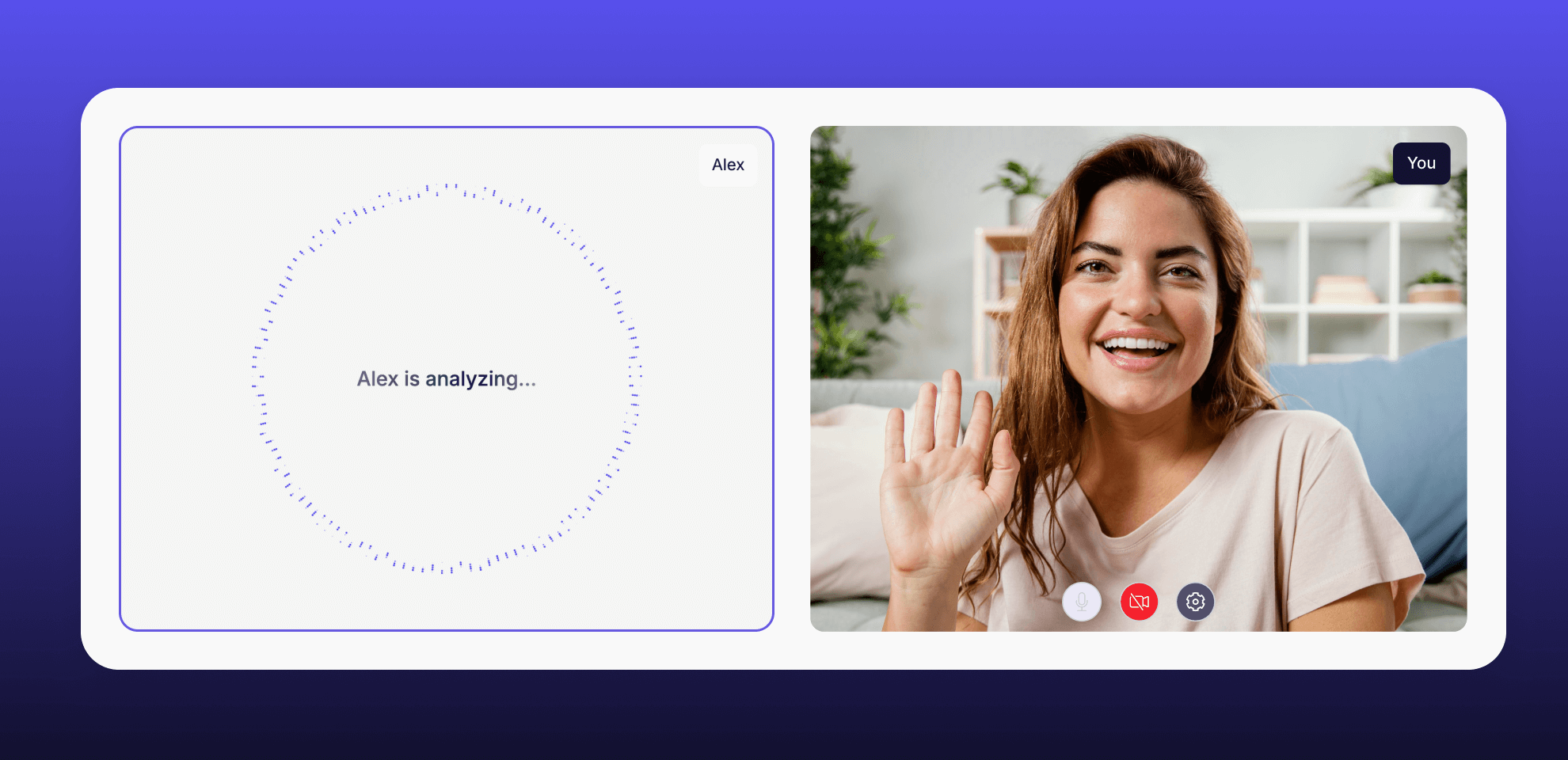

Like it or not, AI-moderated interviews are here and likely to stay. We’ve seen tremendous growth in both the number of options and the sophistication of the models over the past 12 months.

How many tools could you name in the space last year? Companies like Genway, Listen Labs, Strella, and Voicepanel have exploded onto the scene, while more established names like Great Question have added AI-moderated interviews to their all-in-one UX research platforms.


These platforms can now conduct research without a human present, asking questions, probing for detail, and guiding a user through a flow on their own time (and, often, in their own bed). It’s an exciting development, but as Noam Segal notes, the key is balancing the benefits against the risk of getting it wrong.

If you’re wondering what the benefits are, you’re not alone. Here are a few reasons why AI moderation is compelling:

- Speed & scale. AI can deliver qualitative research at a scale that rivals quantitative methods, running hundreds of sessions simultaneously, 24/7, across time zones. This is invaluable for tactical research or time-sensitive projects.

- Localization. There are over 7,000 languages spoken by humans on Earth, and while tools won’t localize all of them, the top 10 languages cover approximately ⅔ of the world’s population. A human moderator typically works in one or two languages; AI moderators can instantly localize to dozens.

- Simultaneous quant & qual. You can collect both structured data and rich stories in a single session, mixing question types in ways that were either awkward (e.g. asking a quant question during an interview) or cumbersome (e.g. asking someone to type a long story).

- Dynamic follow-ups. Unlike traditional surveys, AI can generate contextually relevant follow-up questions based on what participants actually say, creating a more conversational experience.

- Flexible scheduling. Platforms like Great Question have helped solve major pain points around scheduling research sessions, but there are still limits (working hours, time zones, calendar conflicts, etc). With AI moderation, participants can respond on their own time and from anywhere, which helps boost participation and completion rates.

- Built-in fraud detection. Most AI moderation tools have screening capabilities to identify low-quality or fraudulent responses, cutting down on busywork and wasted time for you. This is one of the most critical things that vendors in our space need to solve for AI moderation to work long-term.

Sounds great, right? It’s not all sunshine and roses, though. As we alluded to previously, there are some concrete reasons why we’re not quite ready to pass everything to our robot overlords (or underlings? 🤔).

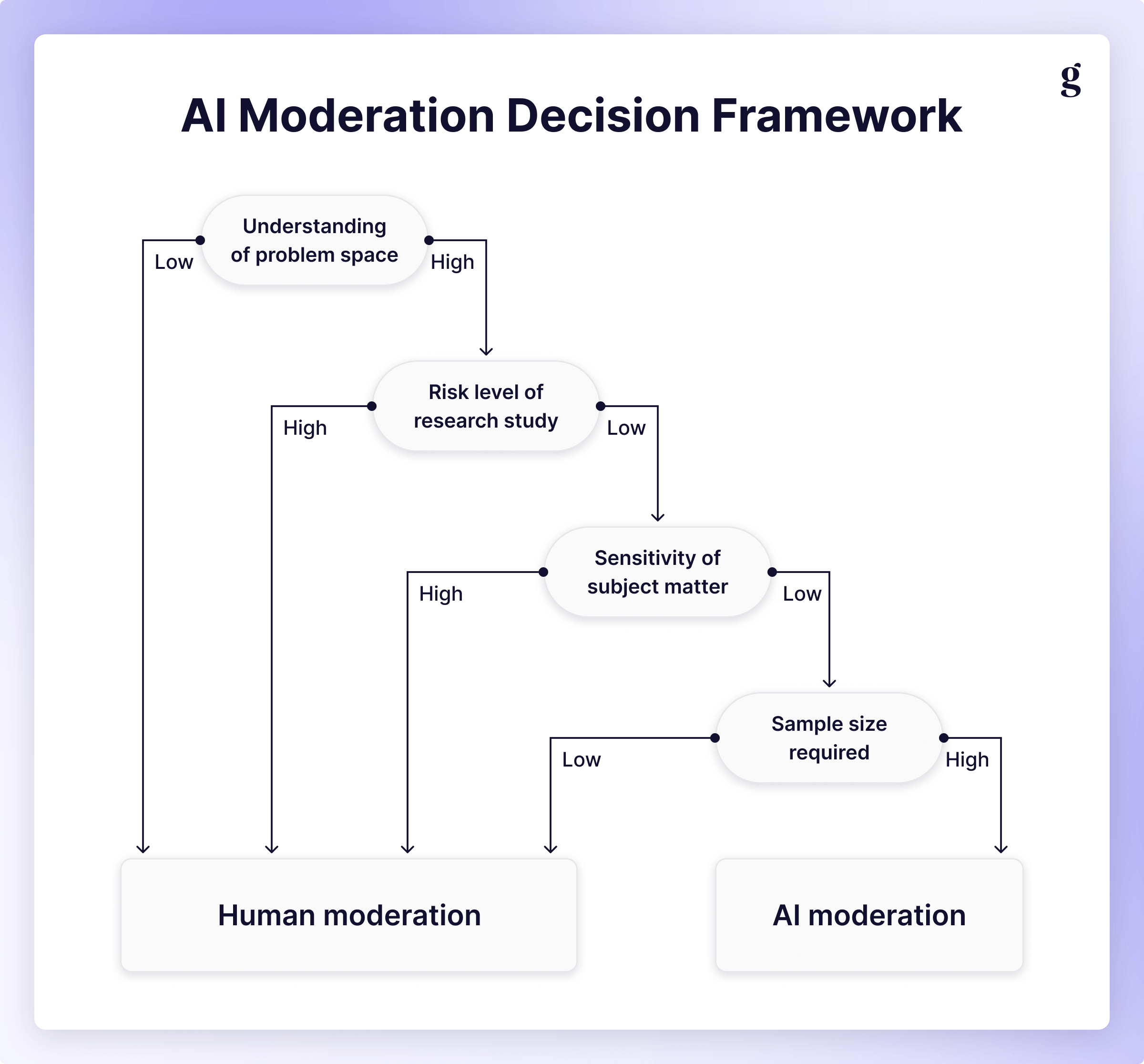

When to use AI moderation

AI moderation shines when you're conducting high-volume research with a consistent focus. If you need to talk to 50 or more participants about a specific topic using standardized questions, AI can execute these interviews efficiently while maintaining quality. This approach is particularly valuable for foundational research, continuous discovery, or validation studies where your primary goal is identifying patterns rather than deep individual exploration.

The tool also excels when you're trying to move beyond anecdotal evidence. Maybe you've seen complaints in support tickets or heard recurring themes from stakeholders, but you need systematic data to validate these hunches. AI moderation can help you collect structured stories at scale, turning scattered signals into solid patterns. Similarly, if you're running usability tests and need to understand where confusion emerges across different user types, AI moderation lets you test flows or features with a large number of participants without requiring dozens of hours from your team.

{{genevieve-james-ai-moderation="/about/components"}}

Geography and timing become non-issues with AI moderation. When your research needs to span multiple time zones or languages, AI moderators can run around the clock. Many tools now offer real-time localization that makes truly global research more accessible than ever before, bringing diverse perspectives into your dataset and strengthening the conclusions you can draw from your research.

Perhaps most surprisingly, participants may sometimes prefer an AI moderator. Anecdotally, researchers have found that most participants say they’d choose AI, especially when they’re given autonomy (though we don’t seem to have anything beyond anecdotal data, so if anyone wants to collaborate on that project, please reach out 👀). Some people find it easier to discuss sensitive topics with an AI* or appreciate having time to think through their responses without feeling the social pressure of keeping a human waiting. It’s essential to give participants the choice, though: forcing them to use AI can lead to feelings of resentment (i.e. “If I’m spending my time on this, why aren’t they?”).

*Note: For sensitive subject matter, we still recommend human moderation to prioritize empathy and minimize risk, as shown in our decision tree above. It’s an interesting study nonetheless.

A brief aside: AI moderation & democratization

There’s a brewing debate in the industry right now about the role of AI in research democratization overall. While I haven’t taken my own deep dive into specific use cases yet, AI moderation can be especially powerful for teams that don’t have dedicated researchers. With proper guardrails, an AI moderator can save time for resource-constrained teams and may even perform better than someone with little-to-no training. If you’ve struggled to get teams to do their own research in the past, consider how AI might help.

Related read: Great Question's 2025 State of UX Research Democratization Report

When to avoid AI moderation

The flip side matters just as much: knowing when AI moderation is the wrong tool for the job. If you're venturing into completely uncharted territory where you don't yet understand the problem space, human moderators offer something AI can't currently replicate: the ability to sense unexpected directions and pivot the conversation on the fly. AI is reasonably good at asking follow-up questions within a defined framework, but when an entirely new idea that deserves exploration emerges mid-session, it tends to ignore it in favor of following the script it was given.

{{christopher-monnier-ai-moderation="/about/components"}}

This limitation becomes especially critical when nuance and emotion sit at the heart of your research. Exploring complex emotional experiences, trauma, or highly contextualized decision-making still requires a human moderator's ability to read between the lines, show genuine empathy, and adapt in ways that go beyond programmed responses. AI lacks the full context of your business (no matter how well you think you’ve trained it), your own research intuition, and crucially, the ability to pick up on what participants aren't saying. Trained researchers may be better at this, but all of us have decades of experience communicating as humans. Pregnant pauses, word choice, or shifts in tone or body language can speak volumes, and AI just doesn’t pick up on them yet.

Certain situations also demand the human touch for relationship reasons rather than purely methodological ones. Conversations with upset customers or research with high-value enterprise clients who expect white-glove treatment generally warrant human moderation. The absence of human connection doesn't just affect the quality of responses; it can impact participant satisfaction and, by extension, your organization’s relationship with those users. Similarly, if you're conducting co-design sessions, participatory research, or ongoing longitudinal studies, you're building relationships over time that require the trust and rapport only human connection can establish.

{{noam-segal-ai-moderation="/about/components"}}

Finally, consider the efficiency equation carefully. Anything highly exploratory or conceptually complex may require as much or more work up front to prepare the AI moderator as it would take to conduct the research yourself. Instead of spending that time on setup, default back to human moderation, where you’ll get the added payoff of deeper insight that AI moderation can’t currently provide.

Best practices for AI-moderated interviews

If you decide AI moderation is right for your project, here are some practices that will help you get the most value:

Design your discussion guide strategically

AI moderation isn't just about handing your usual discussion guide to an algorithm. Keep your guide structured and focused: use clear, simple language (avoid jargon or complex constructions), avoid “double-barreled” questions (asking about multiple things at once), and set expectations about response length (e.g., "Tell us in a few sentences" or "Tell me a story about..."). Try mixing qualitative and quantitative question types for richer data and to break up the monotony of talking to a machine.

Keep sessions brief

Genevieve recommends aiming for 20-30 minutes maximum. One of the benefits of AI moderation for participants is the convenience factor, so if sessions drag on for too long, quality and completion rate tend to drop off. If you find that you’re struggling to keep the discussion to a reasonable length, it’s another sign this study might be better off moderated by a human.

Manage probing carefully

AI can and will dig in incessantly if you let it, and users will get tired and frustrated if the AI keeps pushing for more detail when there’s none to give. Be explicit about what you want the AI to probe (e.g., "Make sure you get a story about their first experience with the feature") and when it can move on, and limit the number of topics you have the AI moderator follow up on. I’d try to limit it to no more than three per study, especially because the system will likely ask multiple follow-up questions for each topic.
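If it helps to make this concrete, here is a minimal Python sketch of writing a probing plan down and sanity-checking it before pasting it into your tool of choice. The `ProbeTopic` structure, the per-topic `max_follow_ups` cap, and the rendered instruction text are all hypothetical; they are not any platform's configuration format. Only the three-topic limit comes from the guidance above.

```python
from dataclasses import dataclass

# Hypothetical structure for a probing plan; not any vendor's real config schema.
@dataclass
class ProbeTopic:
    name: str
    goal: str            # what "done" looks like, i.e. when the AI may move on
    max_follow_ups: int  # how many times the moderator may dig in on this topic

MAX_TOPICS = 3       # rule of thumb from the text: no more than three per study
MAX_FOLLOW_UPS = 3   # assumed per-topic cap; tune it after piloting

def check_probing_plan(topics: list[ProbeTopic]) -> list[str]:
    """Return human-readable problems; an empty list means the plan passes."""
    problems = []
    if len(topics) > MAX_TOPICS:
        problems.append(f"{len(topics)} probe topics; limit is {MAX_TOPICS}")
    for t in topics:
        if not t.goal.strip():
            problems.append(f"'{t.name}' has no stopping criterion")
        if t.max_follow_ups > MAX_FOLLOW_UPS:
            problems.append(f"'{t.name}' allows {t.max_follow_ups} follow-ups")
    return problems

def to_instructions(topics: list[ProbeTopic]) -> str:
    """Render the plan as plain-language instructions for the AI moderator."""
    lines = ["Only probe on the topics below. For everything else, move on."]
    for t in topics:
        lines.append(
            f"- {t.name}: probe until you have {t.goal}, "
            f"asking at most {t.max_follow_ups} follow-ups, then move on."
        )
    return "\n".join(lines)

plan = [
    ProbeTopic("First experience", "a story about their first experience with the feature", 2),
    ProbeTopic("Workarounds", "one concrete example of a workaround", 2),
]
print(check_probing_plan(plan))  # []
print(to_instructions(plan))
```

Keeping the stopping criterion next to each topic is the point of the design: it forces you to decide up front when the AI has "enough," rather than leaving it to probe until the participant gives up.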

{{johanna-jagow-ai-moderation="/about/components"}}

Be mindful of fraud

Is research fraud increasing? Or is it just more visible than ever, given advances in technology? Tough to say. Regardless, it's crucial to screen participants rigorously and consider implicit biases, especially when using external panel recruitment tools like Respondent or Prolific. Bring your own participants whenever possible to ensure quality, and in any case, I’d recommend requiring participants to keep their video on. One of the first things I learned when experimenting with AI moderation is that it’s much easier to tell whether someone is using an LLM to answer questions when their video is on; in one instance, we even caught a participant reading from their phone (which Chris has also seen). 🤦

Build trust with disclosure

Always disclose up front how AI will be used in the study, especially if participants will be speaking with an AI system directly. Give people the option of speaking with a human if they prefer, and even when they don’t, put your name and face behind the study so participants know there's a human in charge. At Glassdoor, simply including the researcher’s name in AI disclosures doubled session length and eliminated drop-offs.

{{athena-petrides-ai-moderation="/about/components"}}

As with all research, you should also tell participants how their data will be protected, what it will be used for, whether it’ll be used for training, what their rights are under data protection laws (e.g. GDPR, CCPA), and how to contact a human if they have any trouble.

Always pilot the study

You wouldn’t ship a new feature without testing it. AI moderation is no different.

Most platforms let you pilot the study yourself before launching it. Do that two or three times to get a feel for how it flows, how it handles follow-up questions, what it does if a participant gets off track, etc. Then run 5-10 sessions and review the results before launching at scale.

Make sure you get a diverse set of perspectives so you can assess a few key questions. Is the pacing right? Does it flow? Are follow-ups working as intended? Are you getting the depth you need? Are users getting frustrated? Finding the right balance is somewhere between an art and a science.

Don’t be afraid to make slight adjustments based on your initial test or on the early feedback you’re getting. Remember: working with AI is an iterative process, and is inherently nondeterministic. You can likely still use some of the data you’ve collected before making adjustments.

Leveraging AI at any stage of the research process is not "set it and forget it." You need to stay involved, monitor recordings as they come back, and be ready to intervene or adjust your approach. This is human-led research with AI assistance, not fully automated research.

{{matt-gallivan-ai-moderation="/about/components"}}

Beyond AI moderation

AI moderation is all the rage, and it's easy to see why. However, focusing solely on this research method for data collection is like buying a Swiss Army knife for the bottle opener. There's so much more happening in this space, from personalized prototypes to novel stimuli and methodologies. AI is pushing the boundaries and creating new ways to gather data from our participants.

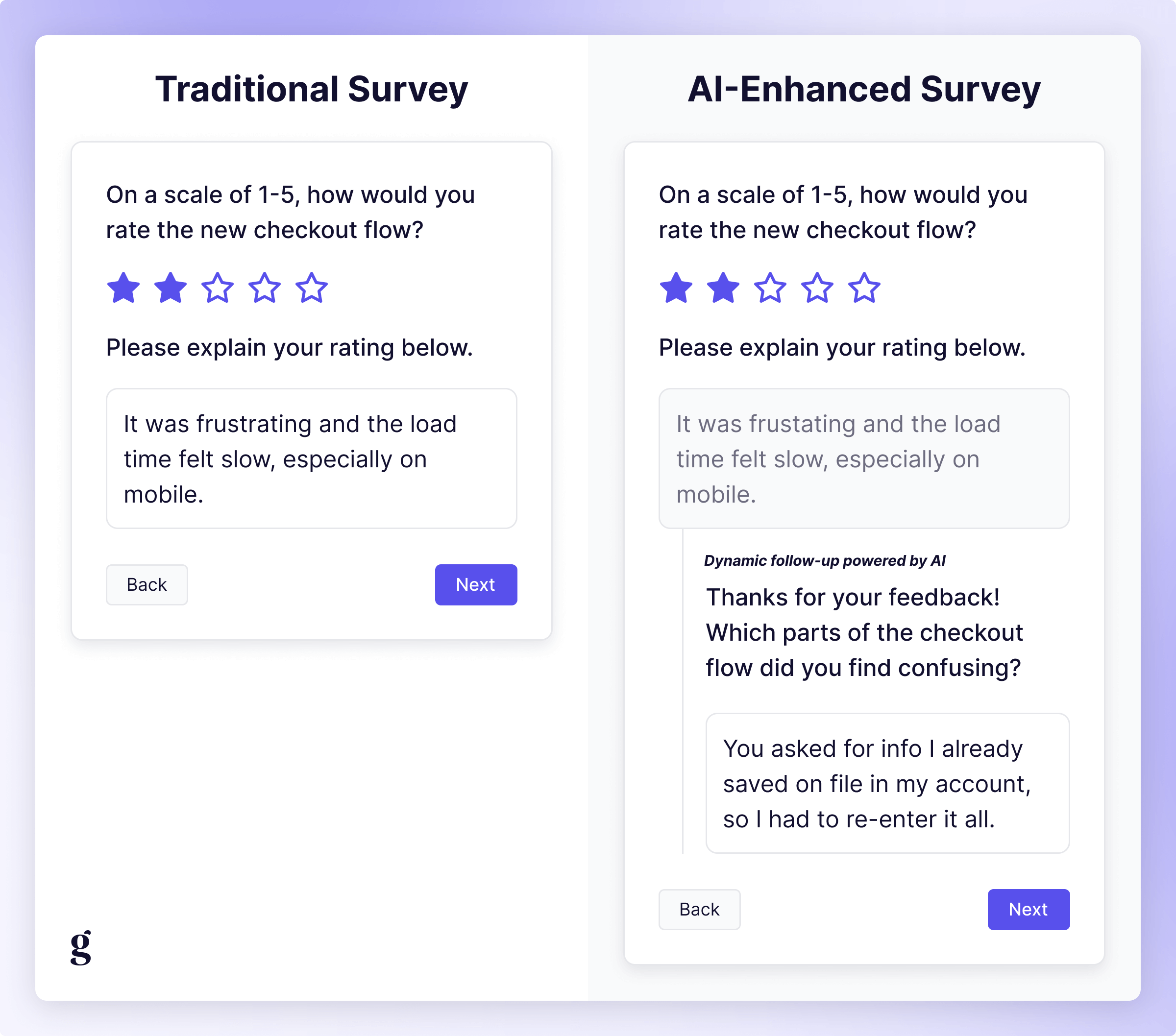

Enhanced surveys: Intelligent, adaptive & responsive

Some AI moderation companies are marketing their products as "smart surveys," and while that might sound like marketing speak, there's actually something to that. Surveys are beginning to evolve from static questionnaires into adaptive, intelligent conversations.

Take intelligent follow-ups, arguably the most compelling application here. Imagine a survey that asks someone "What frustrated you most about the onboarding process?" and then automatically generates a follow-up question based on their response. If they mention confusion about pricing, the next question probes into what specifically was unclear. If they mention technical issues, it asks about their setup and browser. It's like branching logic on steroids; instead of trying to predict every possible response, your survey can dynamically adapt to what's being said.

This extends to vague or short answers as well. If someone writes "it's fine" in response to an open-ended question, the survey can prompt them: "Could you tell me a bit more about what 'fine' means to you in this context?" It's addressing one of the biggest weaknesses in surveying: the lack of depth and richness we struggle with when analyzing responses later.
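To illustrate the decision a "smart survey" has to make before probing, here is a minimal sketch that flags thin answers and builds a follow-up from the participant's own words. The word-count threshold and the list of vague phrases are assumptions; actual products more likely use an LLM both to judge answer quality and to write the follow-up, whereas this version uses a fixed template.

```python
import re
from typing import Optional

# Assumed heuristics for illustration; a real "smart survey" would more likely ask an LLM.
VAGUE_ANSWERS = {"fine", "it's fine", "ok", "okay", "good", "not sure", "idk", "n/a"}
MIN_WORDS = 5

def needs_follow_up(answer: str) -> bool:
    """Flag answers that are a stock vague phrase or shorter than MIN_WORDS words."""
    normalized = answer.strip().lower().rstrip(".!")
    words = re.findall(r"[a-z']+", normalized)
    return normalized in VAGUE_ANSWERS or len(words) < MIN_WORDS

def follow_up_prompt(answer: str) -> Optional[str]:
    """Return a templated probe that reuses the participant's own words, or None."""
    if not needs_follow_up(answer):
        return None
    quoted = answer.strip().rstrip(".!")
    return f"Could you tell me a bit more about what '{quoted}' means to you in this context?"

print(follow_up_prompt("it's fine"))
# Could you tell me a bit more about what 'it's fine' means to you in this context?
print(follow_up_prompt("The pricing page never explained how seats are billed."))
# None
```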

Real-time localization is another area where AI is making surveys genuinely more accessible. Just like with AI moderation, tools can now translate questions into dozens of languages, reaching a much wider set of participants. While it might not completely replace human translators when it comes to cultural context or idiomatic nuance, if the alternative is only surveying in English, this vastly improves the participant experience.

Here's what's interesting: similar to how the lines between Product, Design, Engineering, and Research are getting fuzzy, the line between traditionally qualitative and quantitative methodologies is blurring. We're moving toward a middle ground where you can get some of the depth of interviews with some of the scale of surveys. Will it completely replace either? I don’t think so. But it opens up new possibilities for designing research studies that combine efficiency with nuance.

Stimulus generation: Personalization at scale

One of the most practical applications of AI in data collection is generating stimuli—the materials you show participants during research. This might be the area where AI provides the most immediate value with the least risk.

Instead of showing every participant the same generic, static mockup, you can generate personalized versions tailored to their role, industry, use case, or proficiency level. Testing a project management tool? Generate examples that use terminology, workflows, and even data relevant to each participant's primary role. This increased realism makes it easier for participants to interact authentically, improving their evaluation of whether something works for them. You can also show novice users simpler interfaces with more guidance while expert users see more complex versions with advanced features exposed. This helps you understand how your product needs to work across the expertise spectrum without requiring your design team to generate dozens of separate prototype versions.

Need to test the same concept across ten different markets? AI can adapt color palettes, imagery, examples, scenarios, and even interaction patterns to local expectations, all while maintaining your brand's standards. This used to take days of work and required specialized skill sets that most teams simply didn't have access to. Now it's doable in an afternoon.

As AI continues to evolve and integrate itself into every facet of work in tech, we're entering a world where every individual user might have a slightly different version of the product experience based on their preferences, needs, and usage patterns. Nondeterministic Design is an emerging field, and as Jakob Nielsen notes, it can create real challenges for Researchers (and for Support staff, too). Instead of testing a small set of static designs, you can leverage AI to test a variety of different layouts, interaction patterns, colors, or word choices. This is essential if you're building anything that uses AI as part of the product experience.

The key benefit here is that AI-generated stimuli remove the constraint of "we can only test what we can build." You can explore more concepts, test more variations, and personalize more deeply without dramatically increasing the time investment from your design or engineering teams.

{{research-maturity-framework="/about/components"}}

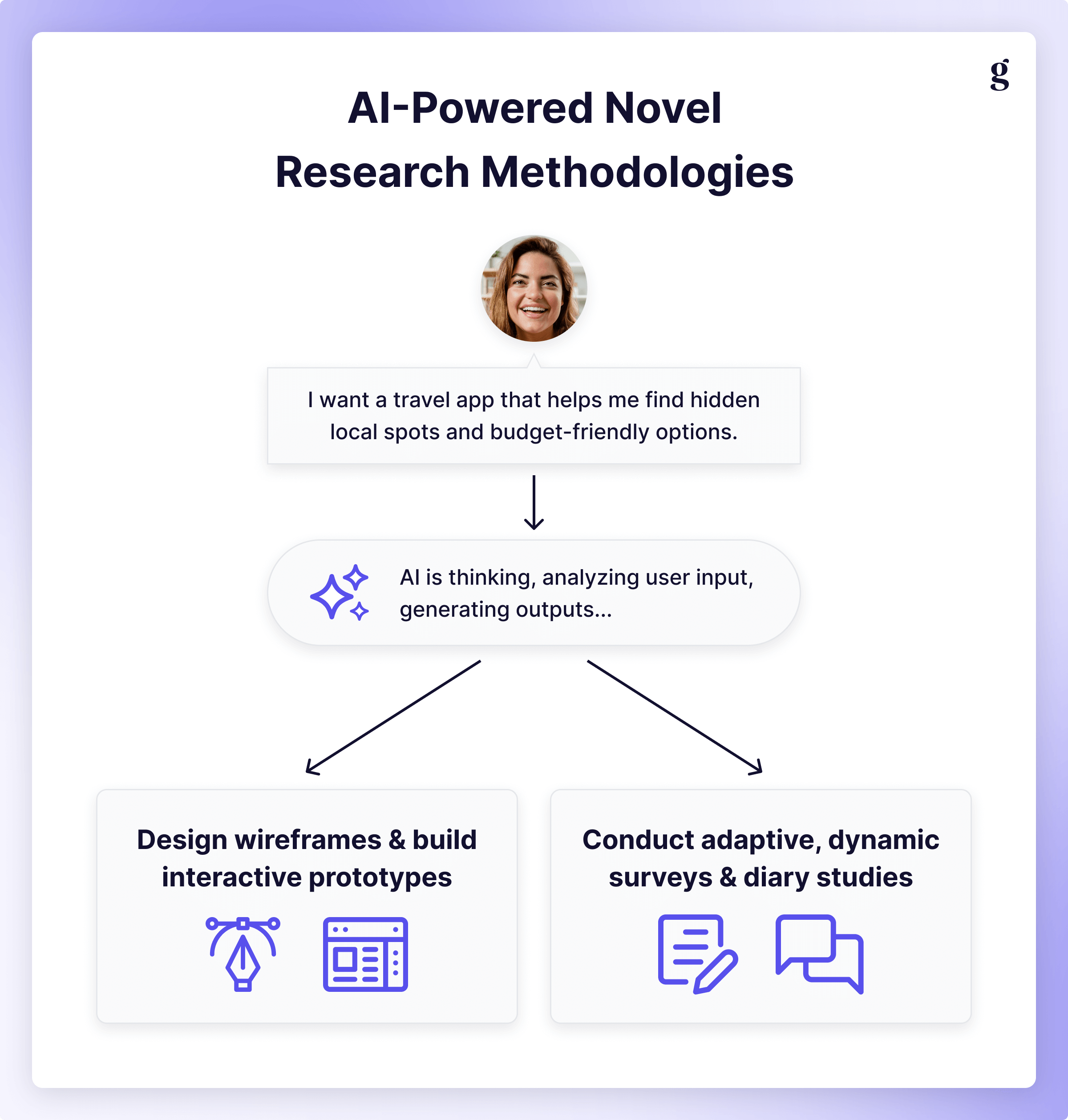

Novel research methodologies: Pushing the boundaries

On top of enhancing existing methodologies, AI both enables entirely new approaches that weren’t practical before and helps us react to the shifting landscape of product design. Here are some emerging methods that AI is making possible.

What if you could run a hybrid interview and concept test in a single session? Picture this: you conduct a traditional interview with a participant about their ideal travel planning experience. Halfway through, you pivot to concept testing where an AI agent generates a personalized mockup or storyboard integrating elements the participant just discussed. By training a custom GPT or agent on the feature sets you're considering, you can have AI generate a few variants based on what's been described as the key pain points and get immediate feedback on those potential features.

Due to the Chatham House Rule, I can’t give credit where it’s due, but a colleague recently described this exact experience: by training Claude to create narratives based on different feature sets they were considering, they were able to get feedback from participants that was highly relevant to their specific use cases. What they ultimately found is that users cherry-picked elements of each story that they liked, making a compelling case to adapt the product roadmap to align with customer needs. It's a powerful way to unlock both validation and direction in a single session.

Diary studies present another opportunity. While traditional diary studies ask participants to log their experiences at set intervals, AI can enhance this method by adjusting prompts based on what participants have already shared, identifying patterns in their entries, and asking targeted follow-up questions about specific incidents. If someone mentions stress three times in a week, the diary might automatically ask them to reflect on common factors. This creates a more responsive, personalized research experience while maintaining the longitudinal benefits of diary studies.
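As a rough sketch of the "stress three times in a week" example, the snippet below counts diary entries per theme over a rolling seven-day window and generates a reflection prompt once a theme crosses a threshold. Keyword matching stands in for the theme tagging you'd more likely hand to an LLM, and the theme keywords, threshold, and sample entries are made up for illustration. You could run something like this on a schedule from an automation tool, but that wiring isn't shown.

```python
from collections import Counter
from datetime import date, timedelta

# Hypothetical keyword lists standing in for LLM-based theme tagging.
THEMES = {
    "stress": {"stress", "stressed", "stressful", "overwhelmed"},
    "collaboration": {"team", "teammate", "collaborate", "collaborated"},
}
THRESHOLD = 3  # entries mentioning a theme before we ask a reflection question

def themes_in(text: str) -> set[str]:
    """Return the themes whose keywords appear in a single diary entry."""
    words = {w.strip(".,!?;:\"'").lower() for w in text.split()}
    return {theme for theme, keywords in THEMES.items() if words & keywords}

def reflection_prompts(entries: list[tuple[date, str]], today: date, days: int = 7) -> list[str]:
    """Count entries per theme over the last `days` days and build follow-up prompts."""
    window_start = today - timedelta(days=days - 1)
    counts: Counter = Counter()
    for day, text in entries:
        if window_start <= day <= today:
            counts.update(themes_in(text))  # each theme counts at most once per entry
    return [
        f"You've mentioned {theme} in {n} entries this week. "
        "What do you think those moments had in common?"
        for theme, n in sorted(counts.items())
        if n >= THRESHOLD
    ]

# Made-up sample entries for illustration.
entries = [
    (date(2025, 6, 2), "Felt stressed before the client demo."),
    (date(2025, 6, 4), "Stressful sync with finance about the budget."),
    (date(2025, 6, 5), "Totally overwhelmed by the ticket queue today."),
    (date(2025, 6, 5), "My teammate helped me triage."),
]
prompts = reflection_prompts(entries, today=date(2025, 6, 6))
print(prompts)
# ["You've mentioned stress in 3 entries this week. What do you think those moments had in common?"]
```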

Operationalizing this at scale might be difficult right now (I'm unaware of any tools that support this out of the box…though stay tuned, I might have something soon 😉) but you can solve many of the pain points involved in running a diary study by leveraging AI, even if it means a certain amount of copy and paste from your preferred LLM or getting your hands somewhat dirty with automation tools like n8n or Lindy.

As interfaces diversify beyond traditional screens, AI is enabling research on emerging modalities such as conversational/chat-based UX, voice user interfaces (VUI), and extended reality (XR) in ways that weren't feasible before. You can leverage an AI voice agent (Twilio, ChatGPT, Gemini, etc.) to prototype which phrasing, tone, and flow work best, or work alongside your engineering team to create XR environments that adapt to participant behavior in real time.

These novel methodologies share a common thread: they use AI to create dynamic, responsive research experiences that adapt to participants while preserving the human insight and interpretation that makes research valuable. I hate to use the term “superpower”, but… 🙃

The future state of research data collection

While the myriad ways in which we can leverage AI today are already impressive, things get even wilder when we start thinking about the future. We’re not far off from some of these use cases—especially if we consider the growth of agentic technologies such as Model Context Protocol (MCP)—and I, for one, am incredibly excited about how these capabilities will change how we work.

How many times have you heard a request for Research to be more proactive, or heard research’s role described as “seeing around corners”? Imagine an AI agent that continuously monitors your Voice of Customer (VoC) data, social media mentions, support tickets, and community forums. When it identifies an emerging theme or shift in sentiment, it automatically generates and deploys a targeted survey to your panel. The survey adapts based on early responses, drilling into the most interesting or unexpected findings, proactively delivering continuous, responsive pulse checks without manual survey management.
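How might such an agent decide that a theme is "emerging"? One simple option, sketched below under stated assumptions, is to compare this week's mention count for each theme against its average over previous weeks. The spike ratio, minimum count, and sample data are hypothetical, and the steps of extracting themes from VoC data and deploying the survey are not shown.

```python
from statistics import mean

# Assumed rule: a theme is "emerging" if this week's mentions are at least SPIKE_RATIO
# times its average over prior weeks, with a floor so rare one-off mentions don't fire.
SPIKE_RATIO = 2.0
MIN_MENTIONS = 10

def emerging_themes(weekly_counts: dict[str, list[int]]) -> list[str]:
    """weekly_counts maps theme -> mention counts per week, oldest first, current week last."""
    flagged = []
    for theme, counts in weekly_counts.items():
        if len(counts) < 2:
            continue  # need at least one prior week to compare against
        *history, current = counts
        baseline = max(mean(history), 1.0)  # avoid dividing by zero for brand-new themes
        if current >= MIN_MENTIONS and current / baseline >= SPIKE_RATIO:
            flagged.append(theme)
    return sorted(flagged)

# Made-up weekly counts for illustration.
counts = {
    "export to CSV": [4, 5, 3, 14],    # spiked this week
    "login issues": [20, 22, 19, 21],  # steady, high volume
    "dark mode": [0, 1, 0, 3],         # rising but still rare
}
print(emerging_themes(counts))  # ['export to CSV']
```

Comparing against a theme's own baseline, rather than ranking by raw volume, is what keeps a chronic, high-volume issue like "login issues" from triggering a new survey every week.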

On top of that, we’re not far off from enabling In-Product AI Moderation: if a user struggles with a feature (e.g. spending 30 seconds rage-clicking), an AI assistant gracefully appears in-product to ask a few contextual questions, record the user’s screen, and possibly suggest help articles or resources right at the moment of friction, marrying contextual data with qualitative depth and potentially solving their problem. Tools like Sprig excel at in-product surveys, getting feedback directly from users at the point of interaction, and we’ve already written at length about AI moderation. In the future, we can bring these two together, combining their benefits both to create a seamless experience and to help Product and Support teams serve customers better.
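The trigger for In-Product AI Moderation could start as simply as rage-click detection. Below is a minimal sketch; the thresholds (three clicks within one second, inside a 30-pixel radius) are assumptions for illustration, since analytics tools each define rage clicks their own way, and the returned message is a placeholder for handing off to an AI moderator.

```python
import math
from dataclasses import dataclass
from typing import Optional

@dataclass
class Click:
    t: float  # seconds since the page loaded
    x: float  # pixels
    y: float

# Assumed thresholds for illustration; analytics tools each define "rage click" differently.
MIN_CLICKS = 3
WINDOW_S = 1.0
RADIUS_PX = 30.0

def is_rage_click(clicks: list[Click]) -> bool:
    """True if any MIN_CLICKS consecutive clicks fall within WINDOW_S seconds
    and within RADIUS_PX pixels of the first click in that run."""
    ordered = sorted(clicks, key=lambda c: c.t)
    for i in range(len(ordered) - MIN_CLICKS + 1):
        burst = ordered[i : i + MIN_CLICKS]
        first = burst[0]
        quick = burst[-1].t - first.t <= WINDOW_S
        close = all(math.hypot(c.x - first.x, c.y - first.y) <= RADIUS_PX for c in burst)
        if quick and close:
            return True
    return False

def maybe_intercept(clicks: list[Click], already_asked: bool) -> Optional[str]:
    """Return the opening question for a hypothetical in-product AI moderator, at most once per session."""
    if already_asked or not is_rage_click(clicks):
        return None
    return "Looks like this isn't working the way you expected. Mind telling us what you were trying to do?"

burst = [Click(0.0, 100, 100), Click(0.3, 105, 98), Click(0.6, 102, 103)]
print(maybe_intercept(burst, already_asked=False))
```

The `already_asked` flag reflects the article's emphasis on a graceful experience: interrupting a frustrated user more than once per session would likely make things worse.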

Perhaps the most impactful augmentation could be the Moderator’s Copilot. This isn't a replacement; it's support. An AI assistant could join your moderated sessions and provide real-time, private feedback. For example, it might nudge you: "You've been talking for the last 2 minutes. Let the participant speak." It could also identify opportunities: "The participant has mentioned 'collaboration' three times. That seems important to dig into," or keep you on track by noting, "You haven't touched on the 'pricing' section of your discussion guide yet." This copilot empowers the researcher to focus on the most human parts of the job (empathy, rapport, and strategic thinking) while the AI handles the tactical support. After your session, the copilot can provide feedback on your technique, helping train up those who are newer to research. Tools like BrightHire already exist in the realm of hiring; who will be the first to launch something similar for researchers?
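To show how little logic the nudges in that example require, here is a rules-based sketch that runs over a live transcript. It assumes the transcript is already split into timestamped speaker segments, counts watch words with a naive case-insensitive substring match, and treats a guide section as "covered" once the moderator has said its name; all three are simplifying assumptions, and a real copilot would likely lean on an LLM for the last two. The 2-minute and three-mention thresholds come from the examples above; the sample transcript is made up.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    speaker: str  # "moderator" or "participant"
    start: float  # seconds from session start
    end: float
    text: str

MONOLOGUE_LIMIT_S = 120  # "You've been talking for the last 2 minutes"
KEYWORD_THRESHOLD = 3    # "mentioned 'collaboration' three times"

def copilot_nudges(segments: list[Segment], watch_words: list[str],
                   guide_sections: list[str], planned_length_s: float) -> list[str]:
    """Return private nudges for the moderator based on the transcript so far."""
    if not segments:
        return []
    nudges = []
    now = segments[-1].end

    # 1. Monologue: how long has the moderator been speaking without the participant?
    run_start = None
    for seg in reversed(segments):
        if seg.speaker != "moderator":
            break
        run_start = seg.start
    if run_start is not None and now - run_start >= MONOLOGUE_LIMIT_S:
        minutes = int((now - run_start) // 60)
        nudges.append(f"You've been talking for the last {minutes} minutes. Let the participant speak.")

    # 2. Recurring words from the participant (naive, case-insensitive substring count).
    said = " ".join(s.text.lower() for s in segments if s.speaker == "participant")
    for word in watch_words:
        n = said.count(word.lower())
        if n >= KEYWORD_THRESHOLD:
            nudges.append(f"The participant has mentioned '{word}' {n} times. That seems important to dig into.")

    # 3. Guide coverage, checked only once half the planned session has elapsed.
    asked = " ".join(s.text.lower() for s in segments if s.speaker == "moderator")
    if now >= planned_length_s / 2:
        for section in guide_sections:
            if section.lower() not in asked:
                nudges.append(f"You haven't touched on the '{section}' section of your discussion guide yet.")
    return nudges

transcript = [
    Segment("participant", 0, 40, "Collaboration is hard when collaboration tools don't talk to each other."),
    Segment("moderator", 40, 60, "Tell me about onboarding."),
    Segment("participant", 60, 100, "Honestly, collaboration was the main reason we switched."),
    Segment("moderator", 100, 180, "Let me walk you through how our plans work."),
    Segment("moderator", 180, 240, "And that's why the roadmap looks the way it does."),
]
for nudge in copilot_nudges(transcript, ["collaboration"], ["onboarding", "pricing"], planned_length_s=400):
    print(nudge)
```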

What’s next?

AI is transforming data collection in profound ways, offering new efficiencies, enabling novel methodologies, and opening up possibilities that didn't exist even a year ago. But just like planning, data collection is only one phase of the research process.

Part 4 of our series will explore how AI can help solve one of the problems AI-empowered data collection can create: efficiently and effectively making sense of the piles of data you’ve collected.

The goal with any of these guides isn’t to replace researchers with AI; it's to augment our capabilities so we can focus our time and effort on deeper, more impactful work at the pace our organizations need. As we all work toward figuring out best practices, I encourage you to keep experimenting with these tools and to share your knowledge publicly so we can all benefit.

What’s your favorite way AI has made your data collection process better? Comment, tag, or DM Brad on LinkedIn!
