A practical resource for research teams, product leaders, legal, and procurement

Paying research participants isn't optional if you want quality insights on realistic timelines. Survey methodology research shows that appropriate incentives increase participation rates by 8-10 percentage points. Fair incentives also reduce no-shows from 20-25% to 5-10% and help you recruit participants you'd otherwise miss.

The cost: Expect $60-100/hour for consumer participants and $100-200/hour for professionals. A typical 60-minute interview program with 20 consumer participants costs $1,200-2,000 in incentives.
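The cost arithmetic above is simple enough to sketch. The Python snippet below is a minimal illustration that assumes incentives scale linearly with session length; `incentive_budget` is a hypothetical helper, not part of any Great Question API.

```python
def incentive_budget(participants: int, session_minutes: int, hourly_rate: float) -> float:
    """Total incentive spend: participants x session hours x hourly rate."""
    return participants * (session_minutes / 60) * hourly_rate


# 20 consumer participants in 60-minute interviews, at both ends of the $60-100/hour range
low = incentive_budget(20, 60, 60)
high = incentive_budget(20, 60, 100)
print(f"${low:,.0f}-${high:,.0f}")  # $1,200-$2,000
```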

The compliance angle: Incentives are standard practice, and IRBs and ethics boards accept them when they are structured properly. The key is documenting your rationale and ensuring payments compensate time rather than coerce participation.

The operational reality: Modern platforms handle global payments, tax compliance, and fraud prevention automatically.

When we don't pay participants appropriately, three things happen. Recruitment takes 2-3x longer than planned, delaying insights that inform roadmap decisions. Sample quality suffers because you only hear from people with extreme opinions or unusual amounts of free time. And no-show rates spike to 20-25%, wasting researcher time and creating schedule chaos.

The research is clear. A review by Singer & Ye (2013) covering mail, phone, and web surveys found that monetary incentives consistently increase response rates by 8-10 percentage points. That's the difference between filling your research calendar in 4 days versus 12 days.

Based on analysis of thousands of studies on the Great Question platform, here's what the numbers tell us:

The math is simple. Spending an extra $400 in incentives ($80 vs. $60 for 20 people) saves your researcher 5-9 days of recruiting time and reduces wasted calendar slots by 50%. At researcher compensation of $150-200/day, that extra $400 saves roughly $750-1,800 in labor costs.
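Here is the same tradeoff as a quick calculation, using the paragraph's own figures (20 participants, $60 vs. $80, 5-9 days saved, $150-200/day). It is a rough sketch that assumes every recruiting day saved is fully recovered researcher time; the function names are illustrative.

```python
def extra_incentive_cost(participants: int, old_rate: float, new_rate: float) -> float:
    """Additional spend from raising the per-participant incentive."""
    return participants * (new_rate - old_rate)


def labor_saved(days_saved: float, cost_per_day: float) -> float:
    """Researcher cost recovered by filling the study faster."""
    return days_saved * cost_per_day


extra = extra_incentive_cost(20, 60, 80)
savings_low, savings_high = labor_saved(5, 150), labor_saved(9, 200)
print(f"Extra incentives: ${extra:,.0f}")
print(f"Labor saved: ${savings_low:,.0f}-${savings_high:,.0f}")
print(f"Net benefit: ${savings_low - extra:,.0f}-${savings_high - extra:,.0f}")
```

With these inputs the net benefit works out to roughly $350-1,400 per study, before counting the reduction in wasted calendar slots.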

Some teams ask: "Our customers love our product—won't they give us feedback for free?"

Sometimes, yes. But consider the tradeoffs. Selection bias means people who volunteer unpaid time have systematically different characteristics than your actual user base—more time, stronger opinions, different demographics. Reciprocity expectations matter because even passionate users expect professional respect, and payment signals their time has value. And you're facing competition since your users are being recruited by other companies who DO pay.

As Holly Cole, CEO of the ResearchOps community, explains: "If you're not compensating people for their feedback... the feedback that you do get is going to be lower quality, because free information is horribly biased."

When lower or no payment is appropriate: Internal employee research works best when participation is fully optional, during work hours, with manager support—payment can feel weird or create pressure. Professional community research where participants get valuable reports or benchmarks in return may not require full incentives, though a $25-50 token is still appreciated. Beta tester communities where early access has real value can work without payment, but don't abuse this. Even beta testers should get periodic incentives.

Investment: $1,200-2,000 in incentives for a typical 20-participant interview study.

Returns: Incentives can prevent $50-200K in development waste on the wrong features, cut time-to-market by weeks (faster recruitment = earlier insights), and improve product-market fit (better sample quality = more representative feedback).

Even a single prevented feature failure pays for years of research incentives.

Ten years ago, $25 Amazon gift cards were standard. Today, that's what we pay for 15-minute unmoderated tests. Why the increase?

Professionalization has changed the landscape; there are now platforms connecting participants to dozens of studies, and people know market rates. Opportunity cost has increased as the gig economy means your $25 competes with Uber, TaskRabbit, and freelance work. And respect matters because lower payments signal lower importance; your product deserves better data. Inflation hasn't done us any favors either.

We regularly survey participants about their experience. The top 3 factors in accepting a study invite are: fair compensation for time (cited by 78%), interesting topic/company (cited by 64%), and convenient timing/location (cited by 51%).

Notice that compensation is #1, but it's not alone. People want to feel their participation matters. The combination of fair pay + clear purpose + professional treatment is what attracts quality participants.


Legal and compliance teams sometimes worry that paying participants will bias their responses or make them "tell you what you want to hear." The research doesn't support this concern.

What matters is how you frame the payment. Bad framing: "We'll pay you $75 for positive feedback about our app." Good framing: "We're compensating you $75 for 60 minutes of your time and honest perspective."

The distinction matters. When payment is framed as compensation for time (like any consulting or freelance work), it doesn't systematically bias responses. When it's framed as payment for specific outcomes, that's where problems emerge.

The related academic concept is "motivation crowding theory," but the practical takeaway is simple: pay for time and expertise, not for opinions.

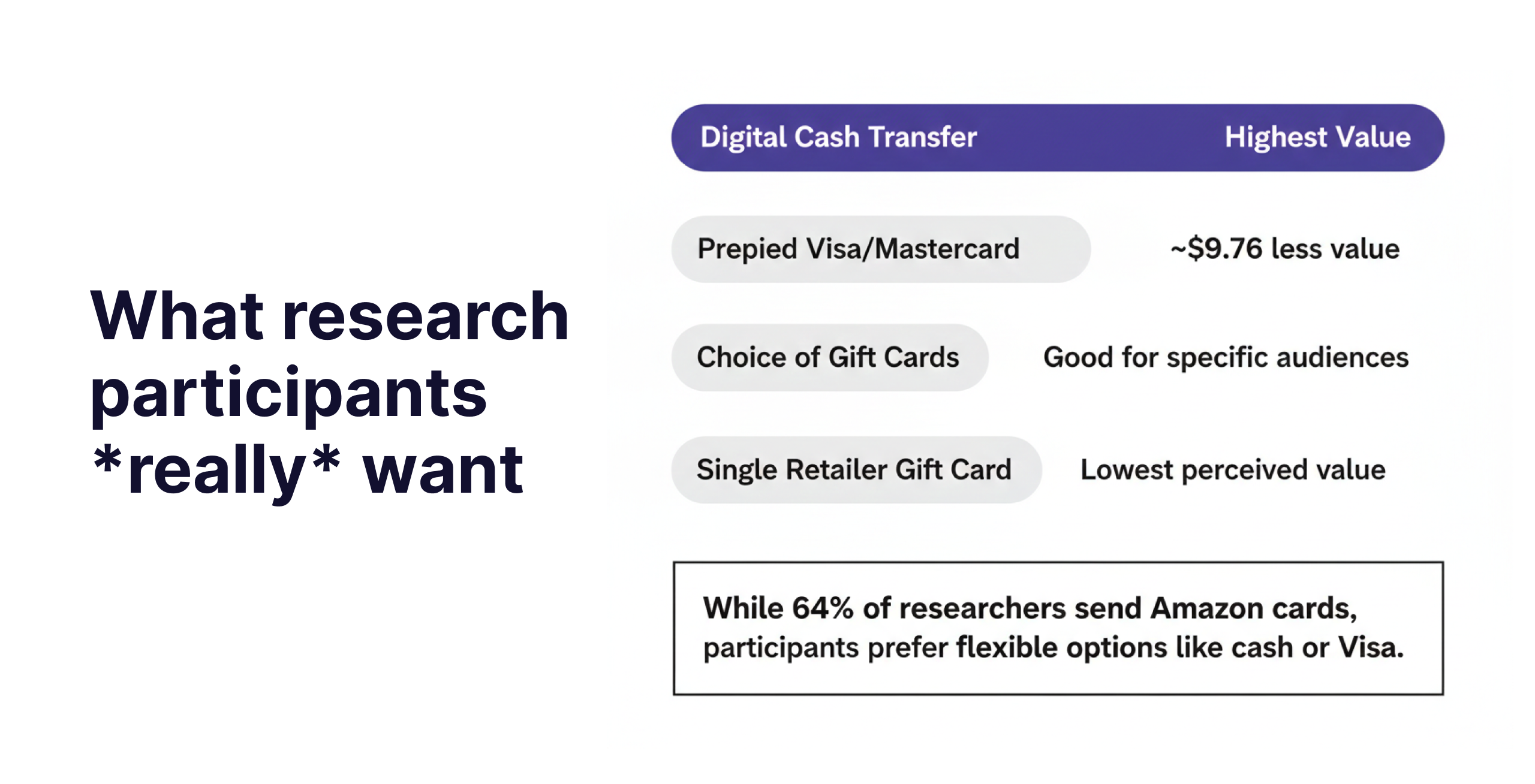

Based on User Interviews' analysis of nearly 20,000 completed studies:

Consumer/B2C Participants (Remote)

Professional/B2B Participants (Remote)

<a href="https://v0-research-incentives-calculator.vercel.app/" target="_blank" style="display:inline-block; background:#2563eb; color:white; padding:12px 24px; border-radius:8px; text-decoration:none; font-weight:500;">Try the Research Incentives Calculator →</a>

Don't just pick a number from the benchmarks above. Work through these factors:

Time commitment: Base calculation is target hourly rate × actual time required. But also consider the minimum payment threshold (even a 10-minute study should pay $10-15), preparation time (if you're asking participants to gather documents or complete pre-work, that counts), and travel time for in-person studies.
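A minimal sketch of the time-commitment calculation: pay for every minute you ask of the participant (session, pre-work, and travel), then apply a floor for very short studies. The $10 default floor is the low end of the $10-15 range above; the function and parameter names are hypothetical.

```python
def base_incentive(
    hourly_rate: float,
    session_minutes: float,
    prep_minutes: float = 0,
    travel_minutes: float = 0,
    minimum_payment: float = 10.0,
) -> float:
    """Hourly rate x all time required, never below a minimum payment."""
    paid_minutes = session_minutes + prep_minutes + travel_minutes
    amount = hourly_rate * paid_minutes / 60
    return round(float(max(amount, minimum_payment)), 2)


print(base_incentive(80, 45, prep_minutes=15))    # 80.0: pre-work counts as paid time
print(base_incentive(60, 5, minimum_payment=15))  # 15.0: the floor applies
```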

Participant difficulty: Apply these adjustment multipliers to your base rate.

Example: You need CMOs at B2B SaaS companies who have purchased ABM software in the last 6 months. Base rate for executives is $200/hour, rarity multiplier is 2.5×, so the appropriate rate is $500/hour.

Study burden: Add 10-20% for sharing sensitive information (financial, health, personal), 10-15% for NDA requirements, 15% for requiring download of beta software, 5-10% for video recording faces (vs. screen only), and 20% total for multiple sessions.

Urgency: Need to fill slots in 2-3 days instead of 5-7 days? Add 20-30% to rates. This is standard market practice—participants who can accommodate last-minute scheduling expect premium pay.
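Difficulty, burden, and urgency can be combined into a single adjusted hourly rate. The sketch below is one way to do it under two assumptions the text doesn't specify: burden surcharges are summed and applied once, and the urgency premium is applied last. Treat that ordering, and the `RateInputs` type, as illustrative choices.

```python
from dataclasses import dataclass


@dataclass
class RateInputs:
    base_hourly_rate: float
    rarity_multiplier: float = 1.0             # participant difficulty, e.g. 2.5
    burden_surcharges: tuple[float, ...] = ()  # fractions, e.g. 0.15 for an NDA
    urgency_premium: float = 0.0               # e.g. 0.20-0.30 for a 2-3 day fill


def adjusted_hourly_rate(inputs: RateInputs) -> float:
    rate = inputs.base_hourly_rate * inputs.rarity_multiplier
    # Assumption: burden surcharges add together, then apply once to the rate.
    rate *= 1 + sum(inputs.burden_surcharges)
    # Assumption: urgency is a final premium on top of all other adjustments.
    rate *= 1 + inputs.urgency_premium
    return round(rate, 2)


# The CMO example: $200/hour base, 2.5x rarity, plus an NDA (15%) and
# on-camera video (10%), filled on a rush timeline (+20%).
cmo = RateInputs(200, 2.5, burden_surcharges=(0.15, 0.10), urgency_premium=0.20)
print(adjusted_hourly_rate(cmo))  # 750.0
```

Without the burden and urgency adjustments, the same inputs return the $500/hour from the CMO example.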

Your budget reality: If budget is truly constrained, reduce sample size (12 quality participants beat 20 mediocre ones), use unmoderated methods when appropriate (they cost 60-70% less), or extend your timeline so you can accept lower rates. Don't underpay significantly; you'll waste more money on failed recruitment.
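To see which levers fit a fixed budget, the sketch below prices each option while holding the per-participant rate constant, in line with the advice not to underpay. It assumes the 60-70% unmoderated saving applies to incentive spend and uses 65% as a midpoint; `budget_options` is a hypothetical helper.

```python
def budget_options(
    budget: float,
    participants: int,
    incentive: float,
    reduced_participants: int = 12,
    unmoderated_discount: float = 0.65,
) -> dict[str, float]:
    """Return the study designs whose incentive cost fits within the budget."""
    options = {
        "moderated, full sample": participants * incentive,
        "moderated, reduced sample": reduced_participants * incentive,
        "unmoderated, full sample": participants * incentive * (1 - unmoderated_discount),
    }
    return {name: round(cost, 2) for name, cost in options.items() if cost <= budget}


for name, cost in budget_options(budget=1200, participants=20, incentive=80).items():
    print(f"{name}: ${cost:,.2f}")
```

Here the full moderated study ($1,600) is dropped, while the 12-person moderated study and the unmoderated version both fit.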

While monetary incentives are the default for most research, non-monetary options can be equally or more effective for certain audiences, particularly B2B professionals who may find cash payments transactional or awkward.

Non-monetary incentives work best for:

Effective non-monetary options include: research reports/industry benchmarks (high perceived value for B2B), early feature access (power users, beta communities), account credits/upgrades (existing customers), charitable donations (offer 2-3 charity choices), professional development (courses, conference passes), and peer networking opportunities (exclusive roundtables or community access).

Often the best solution combines monetary and non-monetary incentives. For example: "We'll compensate you $100 for your time, and you'll also receive our annual industry benchmark report ($500 value) before public release." This respects their time while adding differentiated value that cash alone can't provide.

Important: Non-monetary incentives should supplement fair compensation, not replace it. Using "exposure" or "early access" as an excuse to avoid paying participants is a fast path to recruitment problems and sample bias.

This is what legal teams worry about: Are we paying people so much that they'll participate in something risky that they wouldn't otherwise do?

The regulatory framework: In the U.S., federally funded human-subjects research is governed by 45 CFR 46 (the "Common Rule"). IRBs (Institutional Review Boards) must ensure incentives don't create "undue influence," and the HHS Office for Human Research Protections (OHRP) provides guidance.

The practical reality: For standard UX/product research with no physical risk, typical market-rate payments are not undue influence. Undue influence concerns apply mainly to medical research with health risks, research with vulnerable populations (children, prisoners), and studies requiring participants to reveal information that could harm them.

Based on OHRP guidance, here's what makes an incentive plan defensible:

✅ Good practices

Compensation model: Frame as payment for time and expertise, not payment for specific answers. Use hourly rates comparable to unskilled labor ($15-25/hour minimum) with adjustments for expertise. Document your rationale for the amount.

Timing and conditions: Pro-rate payment for partial completion (e.g., if someone completes 30 minutes of a 60-minute interview, pay 50%). Don't make payment contingent on completing the entire study; this can pressure people to continue when they want to stop. A small completion bonus (10-15% of the total) is acceptable.
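A minimal sketch of pro-rated payment, assuming the completion bonus is paid on top of the pro-rated base, so 30 of 60 minutes still earns exactly 50% of the base as in the example above. The function name and the 15% cap check are illustrative, not taken from any specific platform.

```python
def prorated_payment(
    base_amount: float,
    minutes_completed: float,
    minutes_scheduled: float,
    completion_bonus_rate: float = 0.0,
) -> float:
    """Pay for time actually given; add a small bonus only on full completion."""
    if minutes_scheduled <= 0:
        raise ValueError("minutes_scheduled must be positive")
    if not 0.0 <= completion_bonus_rate <= 0.15:
        raise ValueError("keep any completion bonus between 0% and 15%")
    # Clamp the completed fraction to [0, 1].
    fraction = min(max(minutes_completed / minutes_scheduled, 0.0), 1.0)
    amount = base_amount * fraction
    if fraction == 1.0:
        amount += base_amount * completion_bonus_rate
    return round(amount, 2)


print(prorated_payment(75, 30, 60))        # 37.5: left halfway, paid half
print(prorated_payment(75, 60, 60, 0.10))  # 82.5: full session plus 10% bonus
```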

Transparency: Clearly state the payment amount, timing, and conditions in consent documents. Don't exaggerate the payment in recruitment materials. Explain what happens if a participant withdraws.

Special populations: Apply extra scrutiny for economically disadvantaged participants, students, and employees. Consider whether the amount is appropriate relative to their financial situation, and document why payment is necessary and not coercive.

❌ Practices to avoid

Payment structures that pressure continuation include all-or-nothing payment (full amount only if complete entire study) and escalating payments that make it hard to quit. Recruitment practices that create pressure include finder's fees, bonus payments to recruiters based on enrollment speed, and targeting only economically vulnerable populations with high payments. Unclear or misleading terms include hidden conditions, vague timing, and bait-and-switch tactics.

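U.S. tax rules: If a participant receives $600 or more from your company in a calendar year, you must issue them a Form 1099 (participant incentives are typically reported on Form 1099-MISC) and report these payments to the IRS. That means collecting their taxpayer identification number, usually a Social Security Number.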
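Because the threshold applies to each participant's total for the calendar year rather than to any single payment, it helps to aggregate payments before deciding who needs a form. The sketch below assumes a simple list of `(participant_id, payment_date, amount)` records; the threshold is a parameter, defaulting to the $600 figure above, so it can be updated if IRS rules change.

```python
from collections import defaultdict
from datetime import date
from decimal import Decimal

# Figure from the text; confirm the current IRS threshold before relying on it.
REPORTING_THRESHOLD = Decimal("600")


def participants_needing_1099(
    payments: list[tuple[str, date, Decimal]],
    tax_year: int,
    threshold: Decimal = REPORTING_THRESHOLD,
) -> list[str]:
    """IDs of participants whose total payments in tax_year meet the threshold."""
    totals: defaultdict[str, Decimal] = defaultdict(Decimal)
    for participant_id, paid_on, amount in payments:
        if paid_on.year == tax_year:
            totals[participant_id] += amount
    return sorted(pid for pid, total in totals.items() if total >= threshold)


payments = [
    ("p-001", date(2025, 3, 4), Decimal("250.00")),
    ("p-001", date(2025, 9, 18), Decimal("350.00")),   # two studies: $600 total
    ("p-002", date(2025, 5, 2), Decimal("599.99")),
    ("p-002", date(2024, 12, 30), Decimal("150.00")),  # prior year, not counted
]
print(participants_needing_1099(payments, 2025))  # ['p-001']
```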

How Great Question + Tremendous handles this: Automatically collects W-9 forms when needed, generates 1099s at year-end, and files with the IRS on your behalf.

International payments: Different rules apply (for example, non-U.S. individuals provide Form W-8BEN instead of a W-9). Whether gift cards change your reporting obligations depends on the jurisdiction, so consult a tax advisor for large international programs.

What your legal team will want to see:

Many teams start with "we'll just send Amazon gift cards." Here's why that breaks down.

Each payment consumes 10-15 minutes of researcher time to purchase, send, track, and follow up. There's no international support, since Amazon gift cards don't work in most countries. Tax compliance becomes a nightmare of spreadsheets tracking who got paid what, plus manual 1099 creation. There's fraud risk, since without verification it's easy for bad actors to game the system. And participants get frustrated by delays, wrong emails, and redemption problems.

At 100 participants per year, that's roughly 17-25 hours spent on payment logistics alone, and the total grows with every additional participant.

Great Question's incentive management is powered by Tremendous, providing a fully integrated experience.

Study setup (in Great Question): Define incentive amount and conditions, choose payment method (gift cards, prepaid cards, etc.), and set payment trigger (immediate, manual approval, scheduled).

Participant journey: They see the incentive clearly in screener and consent, choose their reward preference (if offering options), and provide tax info if needed (handled automatically).

Payment delivery (via Tremendous): Triggered automatically or manually by researcher, participant receives email with reward within minutes, and can choose from 2,000+ options if you offer flexibility.

Compliance and reporting: All payments tracked in Great Question dashboard, export for accounting/finance, automatic 1099 generation at year-end, tax filing handled by Tremendous.

Pricing transparency

Example calculation: 20 participants × $75 = $1,500 in incentives + ~$45 Tremendous fee = $1,545 total. Compare that to a researcher spending 5 hours on manual gift cards at $60/hour: $300 in labor, plus mistakes and delays.

50% of research teams conduct international research. Here's what works:

Best practice: Let participants choose. What works in Seattle doesn't work in São Paulo.

Currency considerations: Don't just convert USD to local currency using exchange rates. Calibrate to local purchasing power—$50 USD goes much further in Manila than San Francisco.
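One way to calibrate to purchasing power is to scale the USD amount by a PPP conversion factor (local currency units per international dollar, as published for example in the World Bank's PPP series) instead of the market exchange rate. This is a minimal sketch of that approach; the rates in the example are made-up placeholders, not real data for any country, and the result still needs a sanity check against local norms.

```python
def calibrate_incentive(
    usd_amount: float,
    ppp_lcu_per_intl_dollar: float,
    fx_lcu_per_usd: float,
) -> dict[str, float]:
    """Compare a straight exchange-rate conversion with a PPP-calibrated amount.

    Both results are in local currency units (LCU).
    """
    naive = usd_amount * fx_lcu_per_usd                # same nominal value as in the U.S.
    calibrated = usd_amount * ppp_lcu_per_intl_dollar  # same purchasing power
    return {"exchange_rate": round(naive, 2), "ppp_calibrated": round(calibrated, 2)}


# Placeholder rates for a hypothetical market where prices are lower than in the U.S.
print(calibrate_incentive(50, ppp_lcu_per_intl_dollar=20.0, fx_lcu_per_usd=56.0))
# {'exchange_rate': 2800.0, 'ppp_calibrated': 1000.0}
```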

Tremendous handles automatic currency conversion, local reward options by country, and compliance with local regulations.

Standard Rate Card

Adjustment Factors

Approval Workflow

Special Cases

For research leaders presenting to product/executive leadership:

The ask: $X for participant incentives across Y studies.

The business case:

The comparison:

The precedent: Every major tech company pays research participants. This is table stakes for quality insights.

Annual Research Incentive Budget

Add 10% buffer for rush studies and difficult recruitment: $57,178

Cost per insight: With 50 studies generating 500+ hours of conversations, that's ~$114 per research hour, or ~$1,140 per study—a fraction of agency costs.
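The $57,178 total implies a pre-buffer subtotal of $51,980 ($57,178 ÷ 1.10). The sketch below reproduces the buffer and the per-study and per-hour figures from that derived subtotal; the function name is hypothetical.

```python
def annual_budget_summary(
    subtotal: float, studies: int, research_hours: float, buffer_rate: float = 0.10
) -> dict[str, float]:
    """Apply a contingency buffer and derive unit costs."""
    total = round(subtotal * (1 + buffer_rate), 2)
    return {
        "total": total,
        "per_study": round(total / studies, 2),
        "per_research_hour": round(total / research_hours, 2),
    }


print(annual_budget_summary(51_980, studies=50, research_hours=500))
# {'total': 57178.0, 'per_study': 1143.56, 'per_research_hour': 114.36}
```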

Recruitment efficiency

Participation quality

Financial metrics: Track cost per completed session, wasted incentive (paid but no-showed, if prepaid), and incentive as % of total research budget (typical: 20-30%).
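The three financial metrics can be computed from per-session records. The sketch below assumes a hypothetical `Session` record and treats an incentive as paid when the session was completed or the incentive was prepaid; unpaid no-shows cost nothing in incentives.

```python
from dataclasses import dataclass


@dataclass
class Session:
    incentive: float
    completed: bool
    prepaid: bool = False


def incentive_metrics(sessions: list[Session], total_research_budget: float) -> dict[str, float]:
    paid = [s for s in sessions if s.completed or s.prepaid]
    total_paid = sum(s.incentive for s in paid)
    completed = sum(1 for s in sessions if s.completed)
    wasted = sum(s.incentive for s in sessions if s.prepaid and not s.completed)
    return {
        # Includes wasted prepaid incentives, so this is the true cost per completed session.
        "cost_per_completed_session": round(total_paid / completed, 2) if completed else float("nan"),
        "wasted_incentive": wasted,
        "incentive_share_of_budget": round(total_paid / total_research_budget, 3),
    }


# 10 sessions at $75: 9 completed, 1 prepaid no-show; $3,000 total research budget
sessions = [Session(75.0, completed=True) for _ in range(9)]
sessions.append(Session(75.0, completed=False, prepaid=True))
print(incentive_metrics(sessions, total_research_budget=3_000))
# {'cost_per_completed_session': 83.33, 'wasted_incentive': 75.0, 'incentive_share_of_budget': 0.25}
```

The 25% share falls inside the typical 20-30% range.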

What good looks like

Q: "Can't we just interview our existing users for free?"

Some will participate without pay, but you'll systematically miss people with less time (busy professionals, parents, hourly workers), people with weaker opinions (the "silent majority"), and people considering alternatives (at-risk customers). The $75 you save per interview costs you thousands in biased insights.

Q: "This seems expensive. How do I know it's worth it?"

Compare to alternatives: agency research runs $50-150K per project and engineering time spent building the wrong features costs $200-500K, while incentives for 50 studies total $50-60K/year. If incentives prevent even a single major feature failure, the ROI is roughly 3:1 to 10:1.

Q: "How do I benchmark if we're paying appropriately?"

Track these metrics: time to fill should be 3-7 days for most studies, no-show rate should be under 10%, and participant quality ratings should average 4.5+/5.0. If these are off, your incentives might be too low.
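These benchmarks translate directly into a simple check. The sketch below flags only the directions that point to low incentives (slow fills, high no-shows, low quality ratings); the thresholds come from the paragraph above and the function name is illustrative.

```python
def incentive_warnings(days_to_fill: float, no_show_rate: float, avg_quality: float) -> list[str]:
    """Return warnings for metrics outside the benchmarks; empty means on track."""
    warnings = []
    if days_to_fill > 7:
        warnings.append(f"slow to fill: {days_to_fill:g} days (target 3-7)")
    if no_show_rate >= 0.10:
        warnings.append(f"high no-show rate: {no_show_rate:.0%} (target under 10%)")
    if avg_quality < 4.5:
        warnings.append(f"low quality rating: {avg_quality:.1f}/5.0 (target 4.5+)")
    return warnings


print(incentive_warnings(5, 0.06, 4.7))   # []
print(incentive_warnings(12, 0.22, 4.1))  # three warnings
```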

Q: "How do we ensure this doesn't create undue influence?"

Three key practices: pay market rates for time (not excessive amounts for risk), pro-rate for partial completion (never all-or-nothing), and document rationale in consent forms. Standard UX research with market-rate payments is low-risk for undue influence.

Q: "What documentation do we need?"

Three documents: incentive rationale (why this amount), consent language (clear terms), and payment records (who, when, how much). Great Question generates these automatically.

Q: "Are there people we absolutely can't pay?"

Check for government ethics rules (federal employees often can't accept), healthcare COI policies (depends on context), and your company's gift policies (for competitor employees). When in doubt, consult your legal team. Alternatives exist (charity donations, no payment with strong professional value).

Q: "Why can't we just use our corporate credit card for Amazon gift cards?"

Three problems: it doesn't scale internationally (Amazon doesn't work in 150+ countries), there's no tax compliance (you'll manually generate 1099s for 100+ people), and no fraud prevention (bad actors will game you). At 100+ participants/year, dedicated platforms save money via efficiency.

Q: "What's the total cost of ownership?"

Factor in face value of incentives ($50-75/participant typical), platform fees (~3% transaction fee), researcher time saved (10-15 min/participant, worth $15-25 in labor), and tax compliance (hours saved at year-end, worth $500-1,000). Net: platforms cost ~3% more in transaction fees but save 10-20% in total cost via efficiency.
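The net comparison can be sketched per program. This version uses figures from the ranges above ($75 face value, 3% fee, $15 of labor per manual payment, $500 of year-end tax work) and simplifies by assuming a platform removes the manual per-payment and year-end work entirely; real numbers will vary.

```python
def total_cost_of_ownership(
    participants: int,
    face_value: float = 75.0,
    fee_rate: float = 0.03,
    labor_per_manual_payment: float = 15.0,
    year_end_tax_labor: float = 500.0,
) -> dict[str, float]:
    """Compare manual gift cards with a platform that charges a transaction fee."""
    incentives = participants * face_value
    manual = incentives + participants * labor_per_manual_payment + year_end_tax_labor
    # Simplification: assumes the platform absorbs per-payment and year-end work.
    platform = incentives * (1 + fee_rate)
    return {
        "manual": round(manual, 2),
        "platform": round(platform, 2),
        "savings_pct": round(100 * (manual - platform) / manual, 1),
    }


print(total_cost_of_ownership(100))
# {'manual': 9500.0, 'platform': 7725.0, 'savings_pct': 18.7}
```

With these inputs the platform comes out about 19% cheaper, within the 10-20% range.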

Q: "How do we evaluate vendors?"

Key criteria: geographic coverage (can they pay participants wherever you recruit?), compliance (automatic tax forms, fraud prevention, audit trails?), integration (works with your research tools or requires manual work?), participant experience (how fast do people get paid? how many options?), and support (what happens when payments fail or participants have issues?).

Immediate actions: Audit your current incentive practices against market benchmarks. Calculate time-to-fill and no-show rates for your last 10 studies. If the metrics are off, test higher incentives on your next 3 studies. Document the results to build a business case for a budget increase.

This quarter: Create internal rate card using the template above. Get approval for annual incentive budget. Explore Great Question's incentive features or request a demo.

Questions to ask your research team: What's our average time-to-fill for studies? What's our no-show rate? How do our incentive rates compare to market benchmarks? What would additional incentive budget unlock?

Investment decision: Current research budget is $X (salaries, tools). Proposed incentive budget is $Y (typically 20-30% of total research spend). Expected return includes faster insights, better quality, and more representative sample. Risk of not investing includes biased insights, delayed insights, and wasted researcher time.

Compliance checklist:

Vendor evaluation: Request demo of Great Question, review incentive management capabilities, check integration with Tremendous, calculate total cost of ownership including researcher time saved, and review pricing.

Budget planning: Incentive face value is $50-75/participant average. Platform transaction fees are ~3%. Estimate expected annual volume in participants. Total annual cost = participants × $75 × 1.03.

Great Question is the research operations platform that helps teams recruit participants, conduct studies, manage incentives, and organize insights—all in one place.

Our integrated incentive solution (powered by Tremendous) handles everything from payment delivery to tax compliance, so your researchers can focus on insights instead of logistics.

Ready to streamline your research incentives?

Last updated: January 2026

Questions? Contact us at research@greatquestion.co or talk to your customer success manager.

Ned is the co-founder and CEO of Great Question. He has been a technology entrepreneur for over a decade and after three successful exits, he’s founded his biggest passion project to date, focused on customer research. With Great Question he helps product, design and research teams better understand their customers and build something people want.