This is Part 2 of our 5-part series on AI in UX research. If you missed it, check out Part 1 for a comprehensive overview of AI applications across the entire research workflow.

In Part 1, we surveyed the entire landscape of AI in UX research, from planning through knowledge management. We covered how AI can help you write better research plans (faster), scale personalization in recruiting, and even moderate interviews. Now it's time to roll up our sleeves and dive deeper, starting with planning and recruiting.

Synthesis and analysis are the most widely represented use cases: 88% of the tools in our AI x UXR Tools Comparison say they support this functionality to some degree. AI-moderated interviews have also been all the rage lately. But there's a lot AI can do for you in planning and recruiting, too. These early-stage use cases offer immediate time savings, compound across every project, and carry relatively low risk if something goes wrong. More importantly, they're where most teams can start using AI confidently without investing significant resources.

Whether you're ready to move beyond basic prompting or looking to build sophisticated, multi-step workflows, this deep dive will give you the frameworks and real-world examples you need to transform how you think about leveraging AI in your research practice.

(Before you dive in, make sure to swipe the template I created for building your own research planning assistant. More on this later.)

Beyond basics: Principles for building with AI

While Part 1 introduced AI use cases at a high level, we need to cover some fundamental principles before we go further. These aren't just technical considerations; they'll help you actually save time and boost productivity sooner while also building your own understanding of how to work with AI.

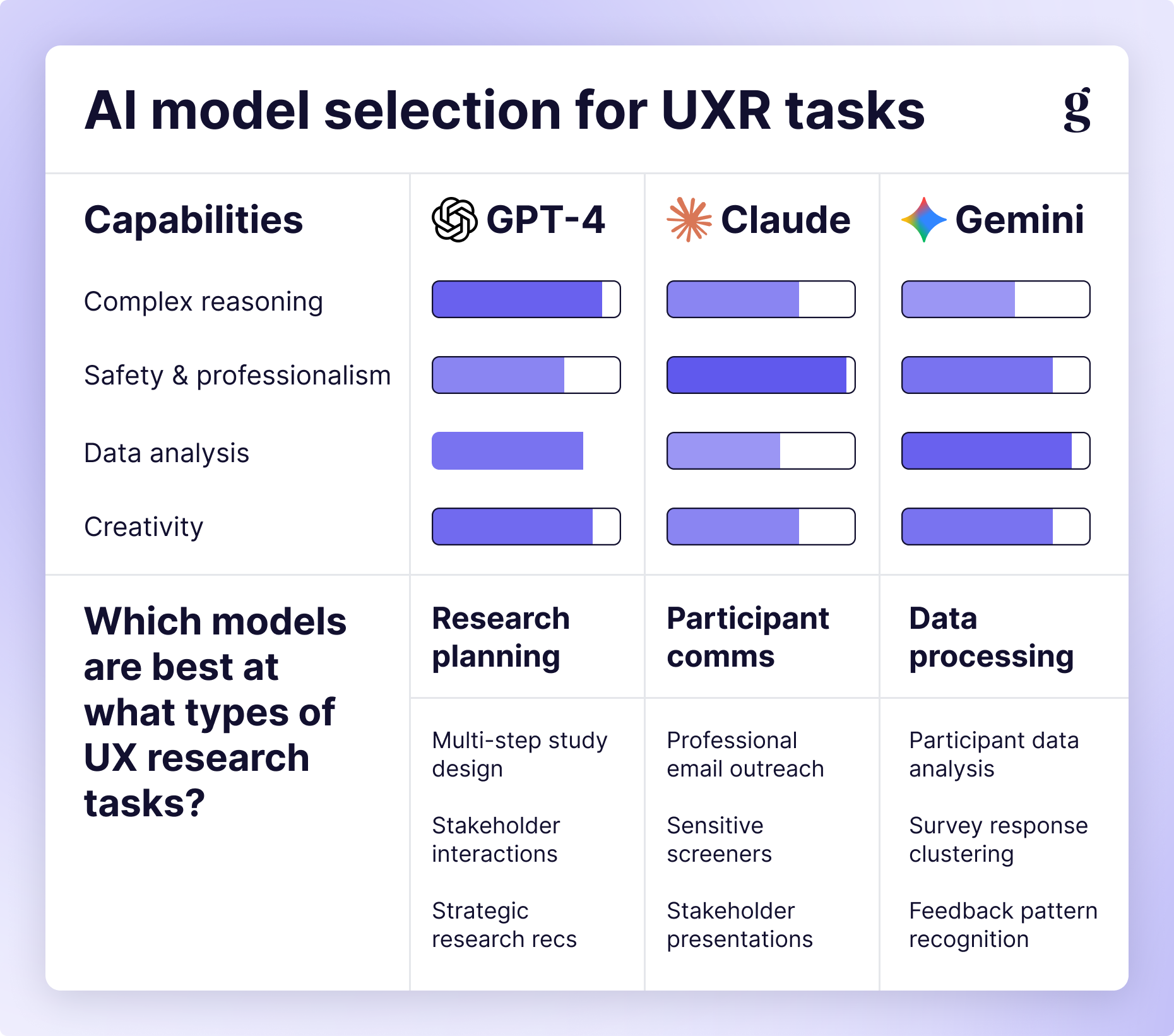

Selecting the right model for the job

In Part 1, we mentioned using "your favorite LLM" for various tasks. This is fine for personal use, but the more you expand the footprint of what AI works on (and who within the company uses the tools you build), the more important model selection becomes. Each LLM has its own personality, strengths, and weaknesses. For example:

- GPT-4* excels at complex reasoning and maintaining context over long conversations, making it ideal for research planning where you need to juggle multiple variables and stakeholder constraints.

- Claude tends to be more methodical and safety-conscious—valuable when generating participant outreach that needs to be professional and appropriate.

- Gemini often performs well with data analysis and pattern recognition tasks.

You'll want to match the tool to the specific task, not just to your personal preference. For brainstorming and ideation, you want creativity and expansiveness. For screening criteria and outreach emails, you need precision and reliability. Whenever possible, experiment with different models and prompts; even the difference between Claude Sonnet and Opus, or Gemini 2.5 Flash and 2.5 Pro, can have a drastic impact.

*Note: Given GPT-5's rocky release, we’re continuing to use GPT-4 in our testing and analysis until GPT-5 stabilizes. Hopefully things improve soon. 🤞

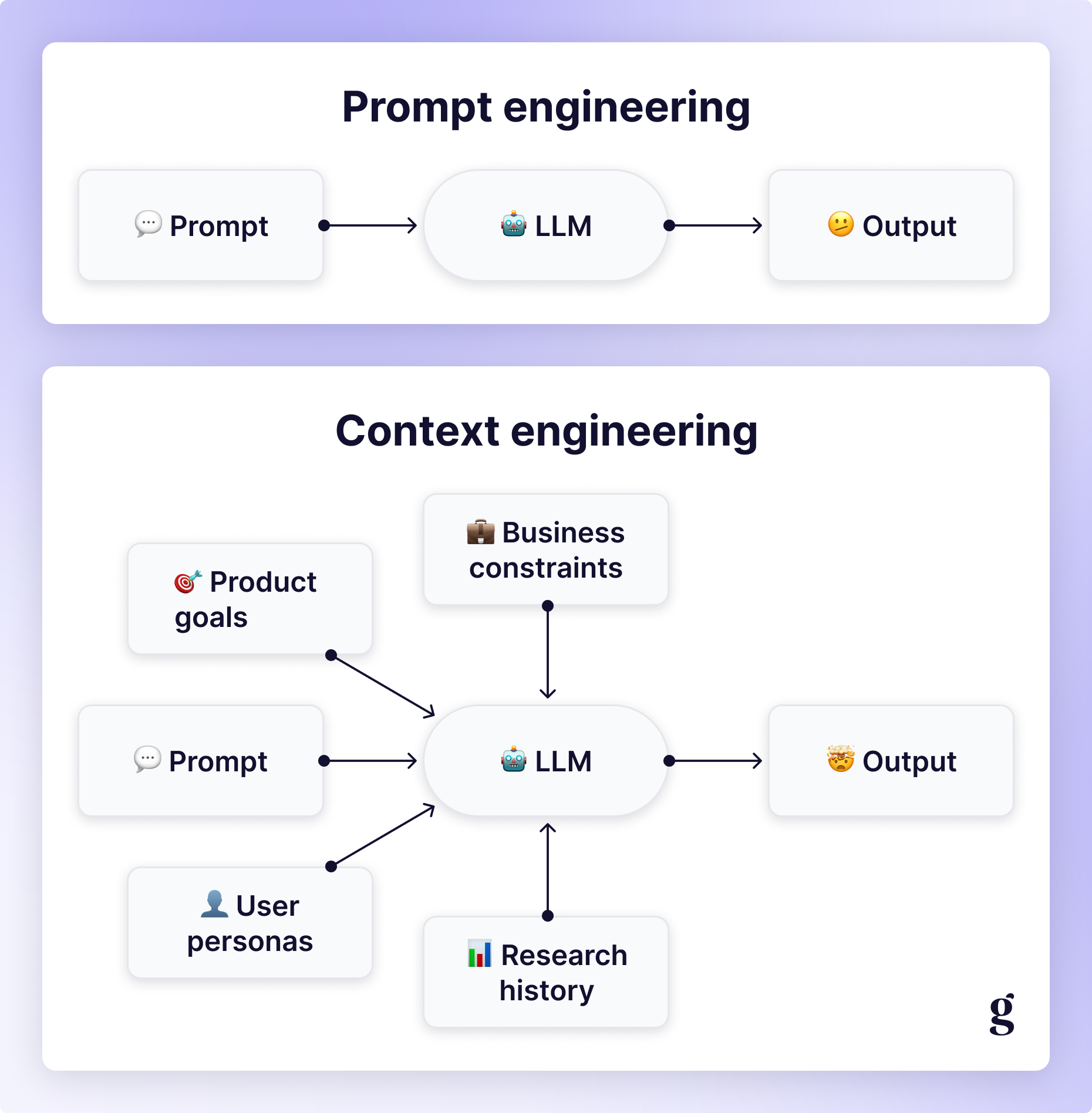

Context engineering vs. prompt engineering, explained

Until recently, most AI discussions focused on crafting the perfect text input, often called prompt engineering. As we all get better at interacting with AI, it’s become clear that context engineering matters more. This means systematically providing the right background information without overwhelming or diluting the request: product goals, audience details, previous research findings, business constraints, even your team's methodological preferences.

A well-contextualized AI can provide significantly better outputs than even the most cleverly prompted one operating in a vacuum. Think of it as the difference between briefing an external freelance contractor versus working with an internal team member who understands your product, users, and organizational dynamics.
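To make context engineering a little more concrete, here's a minimal Python sketch of assembling context ahead of a task. It assumes you keep each piece of background (product goals, audience, past findings) as a separate, prioritized section, and it enforces a rough character budget so lower-priority material gets dropped instead of diluting the prompt. The `ContextSection` class, the `build_prompt` function, and the 4,000-character budget are hypothetical choices for illustration, not any tool's API.

```python
from dataclasses import dataclass


@dataclass
class ContextSection:
    title: str
    body: str
    priority: int  # lower number = more important


def build_prompt(task: str, sections: list[ContextSection], max_chars: int = 4000) -> str:
    """Hypothetical helper: put the most important context first and skip
    lower-priority sections once the character budget is used up.
    The task itself is always appended last and isn't counted against the budget."""
    parts = []
    used = 0
    for section in sorted(sections, key=lambda s: s.priority):
        block = f"## {section.title}\n{section.body.strip()}\n"
        if used + len(block) > max_chars:
            continue  # drop the whole section rather than cutting it mid-thought
        parts.append(block)
        used += len(block)
    parts.append(f"## Task\n{task.strip()}")
    return "\n".join(parts)


prompt = build_prompt(
    "Draft a research plan for the new invoicing flow.",
    [
        ContextSection("Product goals", "Increase invoice completion for small businesses.", 1),
        ContextSection("Audience", "Owners of businesses with fewer than 20 employees.", 2),
        ContextSection("Team preferences", "We favor moderated interviews over surveys.", 3),
    ],
)
print(prompt)
```

Dropping whole sections (rather than truncating them) is a deliberate choice here: a half-included persona or findings doc can mislead the model more than leaving it out entirely.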

Taking an iterative approach

AI rarely gets it right on the first try and, for our purposes, that can be a feature, not a bug. Getting from AI slop to something useful requires iteration, both in the prompts you use to set up your assistant and in what you feed it once it's set up (which is important for your PWDR, or people who do research, to understand if you intend to use AI to support democratization). Start broad, evaluate outputs, then refine and build upon them. This mirrors Research and ResearchOps practices anyway: we rarely nail things on the first pass.

The more you practice, the better you’ll get, but it can still take weeks or months of iteration to get a prompt finely tuned. Unless you’re building something solely for yourself, get some of your colleagues involved as well; at Webflow, we actually ran internal usability tests on our Research Planning Assistant to understand what our PMs and designers expected from it. Take time to evaluate the output and stress-test it with edge cases, but don’t hyperfixate on endlessly tweaking your prompts. Perfect is the enemy of good.

Understanding nondeterminism

AI's variability can be frustrating when you want consistent results, but variability is inherent to how LLMs work, and it can actually be beneficial for things like research. Nondeterminism helps you explore different angles, consider alternative approaches, and avoid getting stuck in familiar patterns. When generating research plans, sample questions, or recruitment emails, try running similar prompts multiple times to see different results and explore what’s possible.

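Pro tip: Most LLMs expose a “temperature” parameter, usually through the API or a developer playground rather than the consumer chat app, that controls how random (or “creative”) the output is. Lower values produce more consistent answers; higher values produce more varied ones.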

You might be worried that this means anyone can shake the Magic 8 Ball until they get the answers they want. What’s important here is knowing when you want consistency (final deliverables) versus when you want exploration (early ideation). This is a big part of why I don’t foresee AI fully replacing human expertise anytime soon. Half of being successful with AI is knowing what quality input looks like; the other half is being able to determine what quality output looks like. As always: Garbage in, garbage out.
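For the curious, here's roughly what the temperature tip above does under the hood: the model's raw scores for candidate next tokens are divided by the temperature before being turned into probabilities with a softmax. This toy Python sketch uses made-up scores for three candidate words; it illustrates the math, not any particular vendor's API.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities after dividing by temperature.
    Low temperature sharpens the distribution (more predictable picks);
    high temperature flattens it (more varied picks)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


toy_logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate next words
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(toy_logits, t)
    print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

At 0.2 the top candidate takes nearly all of the probability, while at 2.0 the three options spread out, which is why lower settings suit final deliverables and higher ones suit early ideation.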

Trust vs. risk: A framework for AI implementation

A helpful framework for deciding when and how to use AI is to weigh the trust you have in the system against the risk of the task.

- Low-risk tasks: Brainstorming interview questions, summarizing your own notes, drafting an initial research plan for internal review. These are great candidates for high automation and trust. If the AI gets it wrong, the consequences are minimal.

- High-risk tasks: Sending external communication to participants, synthesizing raw data into final insights, making recruiting decisions based on PII. These tasks require significant human oversight. The reputational and ethical risks are too high to delegate completely.
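If you want to encode this framework in a workflow (say, as a gate before an agent acts), a minimal sketch might tag each task with risk factors and require human review whenever any high-risk factor is present. The factor names below are hypothetical and simply mirror the examples above; you'd adapt them to your own team's policies.

```python
# Hypothetical risk factors mirroring the high-risk examples above.
HIGH_RISK_FACTORS = {
    "external_communication",  # e.g. emails to participants
    "uses_pii",                # any participant personal data
    "final_insights",          # synthesized findings that become deliverables
    "recruiting_decision",     # who gets invited or screened out
}


def oversight_for(task_factors: set[str]) -> str:
    """Return the minimum oversight a task needs based on its risk factors."""
    risky = task_factors & HIGH_RISK_FACTORS
    if risky:
        return "human review required: " + ", ".join(sorted(risky))
    return "high automation OK"


print(oversight_for({"internal_draft"}))
print(oversight_for({"external_communication", "uses_pii"}))
```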

Ethical considerations for AI in UX research

As researchers, we have critical ethical considerations to weigh when using AI in our work. We’re nowhere near consensus on best practices, but here are a few things to consider as you roll out AI, especially if you’re working with participant data.

- Radical transparency: Participants should know where and how you’re using AI, and for anything they (or their data) interact with (e.g. outreach/scheduling emails, AI moderation, analysis & synthesis), they should be able to opt out. Here are some best practices you should consider, especially in the context of GDPR.

- Tool selection: Only use AI tools approved by your organization with appropriate privacy agreements. This is especially critical with PII: you don’t want to upload participant (or proprietary) data to a consumer AI tool. When in doubt, use mock data for testing and get explicit approval before using it for real.

- Human oversight: Establish clear protocols for when humans must review AI outputs. I recommend human review/approval for things like high-stakes recruiting (e.g. large enterprise customers), sensitive screening decisions, and any content that represents your organization's voice.

Pro tip: Because Great Question AI uses GPT Enterprise, data containing PII is automatically masked, and data is never used by third parties for training future models. Learn more here.

Building your own research planning assistant

Overcoming the dreaded “blank page problem” is AI 101, but you can go further and develop a full-blown Research Assistant that helps you write research plans, consider different approaches or perspectives, and pressure-test your ideas. Rather than starting fresh with each project, set up a custom assistant (Custom GPT, Gem, Project, etc.) with your organization's context. Alongside your prompt, consider feeding in:

- 5-10 of your favorite research plans as examples

- Your product's key user segments and personas

- Common stakeholder questions and business priorities

- Your team's methodological strengths and constraints

- Typical timeline and resource limitations

This foundational knowledge enables your AI assistant to provide recommendations tailored to your team and business rather than generic textbook advice. If you don’t already have one, this is an excellent opportunity to create a research plan template as well.
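If your source material lives in scattered files, a small script can bundle it into a single document to upload to your custom assistant. This Python sketch assumes a hypothetical folder layout (one subfolder per category listed above, each containing `.md` or `.txt` files); rename the folders to match your own.

```python
from pathlib import Path

# Hypothetical folder layout: one subfolder per category, holding .md or .txt files.
SECTIONS = {
    "example_plans": "Example research plans",
    "personas": "Key user segments and personas",
    "stakeholder_questions": "Common stakeholder questions and business priorities",
    "methods": "Methodological strengths and constraints",
    "constraints": "Typical timelines and resource limitations",
}


def build_knowledge_base(root: Path) -> str:
    """Bundle every .md/.txt file into one document with a heading per category,
    noting any category that has no material yet."""
    out = []
    for folder, heading in SECTIONS.items():
        folder_path = root / folder
        files = []
        if folder_path.is_dir():
            files = sorted(p for p in folder_path.iterdir() if p.suffix in {".md", ".txt"})
        if not files:
            out.append(f"# {heading}\n(none provided yet)\n")
            continue
        out.append(f"# {heading}\n")
        for path in files:
            out.append(f"## {path.stem}\n{path.read_text(encoding='utf-8').strip()}\n")
    return "\n".join(out)


if __name__ == "__main__":
    print(build_knowledge_base(Path("research_context")))
```

The "(none provided yet)" placeholders double as a checklist of what your assistant still doesn't know about your team.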

Your AI sparring partner

The next level beyond asking AI to write a research plan is to collaborate with it to make yours better. Leveraging AI as a sparring partner lets you consider alternative methods or audiences, play with different question wording, or even make contingency plans in case things don’t go perfectly (but that never happens, right? 😉).

The goal isn’t necessarily to get a definitive answer so much as it’s to pressure-test your ideas and ensure you’re considering all options. It’s not a replacement for human critique, but if you can run through 10 different scenarios before presenting to your colleagues, why wouldn’t you?

A few ideas of things you could ask your assistant to do:

- Compare methodological tradeoffs

- Suggest participant samples or screening criteria

- Identify potential biases in your approach

- Flag timeline risks and suggest mitigation strategies

- Emulate feedback from different stakeholders

- Determine the best way to communicate findings based on your audience

- Ensure research goals are aligned with business goals

- Prepare to overcome potential objections from stakeholders

Advanced question generation

Rapid iteration is one of AI’s greatest strengths. In Part 1, we mentioned generating questions from different perspectives. Here are two ways to make this more systematic and go deeper than just playing 20 Questions with AI:

Layered question generation

- Start with your core research objectives

- Generate initial questions focused on those objectives

- Ask AI to create follow-up questions for each primary question

- Generate questions from different stakeholder perspectives (PM, designer, engineer)

- Create questions that probe for emotional/motivational factors

- Develop questions that explore edge cases and failure modes
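The layering above can also be scripted. In this sketch, `ask` is a placeholder for whatever function sends a prompt to your company-approved LLM and returns its text (an assumption; no specific SDK is implied), and the layer instructions are hypothetical wording. The primary questions are generated first, and every later layer builds on them.

```python
from typing import Callable

# Hypothetical instructions for each layer after the primary questions.
FOLLOW_UP_LAYERS = {
    "follow_ups": "For each primary question, write 2 follow-up probes.",
    "stakeholder_views": "Write 3 questions each from a PM's, a designer's, and an engineer's perspective.",
    "motivation": "Write questions that probe emotional and motivational factors.",
    "edge_cases": "Write questions that explore edge cases and failure modes.",
}


def layered_questions(objectives: str, ask: Callable[[str], str]) -> dict[str, str]:
    """Generate primary questions first, then run each follow-up layer against them.
    `ask` is a placeholder for a function that calls your approved LLM."""
    primary = ask(
        f"Research objectives:\n{objectives}\n\n"
        "Write 5 primary interview questions focused on these objectives."
    )
    results = {"primary": primary}
    for name, instruction in FOLLOW_UP_LAYERS.items():
        results[name] = ask(
            f"Research objectives:\n{objectives}\n\n"
            f"Primary questions:\n{primary}\n\n{instruction}"
        )
    return results
```

Because `ask` is passed in, you can dry-run the flow with a fake function before wiring it to a real model.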

Question quality assessment

Ask your AI assistant to evaluate a set of questions for potential issues:

- Leading or biased language

- Assumptions about user behavior or preferences

- Technical jargon that might confuse participants

- Questions that are too broad or too narrow

- Missing areas that should be explored
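Before (not instead of) asking your assistant for a critique, you could run a crude keyword pre-check to catch the most obvious problems. This is a deliberately simple, swapped-in heuristic rather than an AI technique: the patterns and jargon list below are hypothetical examples you'd tune for your own product and audience, and they will miss plenty of subtler issues.

```python
import re

# Hypothetical patterns; tune these for your own product and participants.
LEADING_PATTERNS = [
    r"^(don't|doesn't|wouldn't|isn't|aren't|didn't) you\b",  # "Don't you think...?"
    r"\bhow (much do you (like|love)|easy|great)\b",         # presumes a positive answer
    r"\b(obviously|clearly|surely)\b",                       # signals the "right" answer
]
JARGON = {"onboarding funnel", "mau", "nps", "sku"}


def flag_question(question: str) -> list[str]:
    """Return rough warnings for one interview question (empty list = nothing flagged)."""
    text = question.lower().strip()
    issues = []
    if any(re.search(p, text) for p in LEADING_PATTERNS):
        issues.append("possibly leading")
    if any(re.search(rf"\b{re.escape(term)}\b", text) for term in JARGON):
        issues.append("jargon")
    if text.count("?") > 1 or re.search(r"\band\b.*\?$", text):
        issues.append("possibly double-barreled")
    return issues


for q in ["Don't you think the new dashboard is faster?",
          "Where do you drop off in the onboarding funnel?",
          "Tell me about the last time you sent an invoice."]:
    print(q, flag_question(q))
```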

Caveats & pitfalls

One of the biggest challenges you’ll face is managing context. Unless you’re using Glean or another tool directly integrated with your company’s knowledge base, providing the right amount of context is hard. With too little context, your answers will be too generic; with too much, the agent might get bogged down in details or have a hard time following the conversation (especially after a lot of back-and-forth). Most LLMs aren’t particularly good at noticing or admitting when they’ve lost track of earlier context, so be extra careful in long conversations.


Recruiting & scheduling automations

Part 1 introduced ways to scale personalization and outreach. Let’s take a closer look at how we can improve screening, ease scheduling, and build toward an end-to-end tool.

Better participant selection

The lowest-hanging fruit when it comes to participant selection is moving beyond checkboxes. Instead of setting filters on a spreadsheet or participant database, you can simply give (your company-approved, enterprise-grade) AI your research plan and ask it to pick participants that would be a good fit. Assuming you have enough metadata (which…might be a large assumption 😅), it’s trivial to get 20 candidates back. In that very same prompt, you can also generate outreach emails, personalized to your study and each participant. From there, you could copy/paste—or a little integration magic could send those emails on your behalf as well. ✨
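Here's a minimal sketch of what "give AI your research plan and ask it to pick participants" might look like in practice. It assumes a hypothetical participant export with fields like `role` and `company_size`, and it keeps only an allow-list of non-identifying fields so names and emails never reach the model. The field names and prompt wording are illustrative, not any tool's schema.

```python
import json

# Hypothetical allow-list: only non-identifying fields are sent to the model.
ALLOWED_FIELDS = {"id", "role", "company_size", "plan_tier", "last_study_days_ago"}


def build_selection_prompt(plan_summary: str, candidates: list[dict], max_picks: int = 20) -> str:
    """Build a prompt asking the model to shortlist candidates for a study,
    stripping names, emails, and any other field not on the allow-list."""
    minimized = [{k: v for k, v in c.items() if k in ALLOWED_FIELDS} for c in candidates]
    return (
        f"Research plan:\n{plan_summary}\n\n"
        f"Candidates (JSON):\n{json.dumps(minimized, indent=2)}\n\n"
        f"Pick up to {max_picks} candidates who best fit the plan. "
        "Return a JSON list of objects with 'id' and a one-line 'reason'. "
        "If fewer candidates fit, return fewer; never invent candidates or fields."
    )
```

The model only ever sees ids, so mapping its shortlist back to contact details happens on your side, inside your participant management tool.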

Pro tip: Use AI to test different messaging. Low response from enterprise users? Make sure you’re speaking to the pain points of large orgs, not small businesses or early-stage startups. For global research, AI can help adapt not just language but communication style, formality level, and cultural context based on participant location and profile.

Spreadsheets are cool, but what if AI could proactively suggest participants? You’d need to plug in more data (e.g. your CRM, a product analytics stream, previous survey responses), but next-level recruiting is where your AI assistant identifies patterns across your entire user base to surface people who might be good candidates. What if your tireless junior researcher was also a data analyst, building custom candidate pools for every study?

Intelligent calendar management

Tools like Doodle and Calendly revolutionized scheduling, and nowadays most participant management tools have a scheduling feature built in. In many situations, this is good enough. However, AI can improve the experience by keeping the whole interaction in one conversation. Instead of forcing participants to open a separate (potentially unfamiliar) app or website, your AI assistant can suggest times that work for you or ask participants for their availability in natural language. All they have to do is reply to the email, and your agent can automatically generate calendar invites with relevant links, create placeholder documents for notes, and handle any technical setup needed.

An AI assistant can also be more flexible with scheduling, understanding the difference between a 1:1 meeting (that you can probably move) vs. a team- or company-wide event you can’t miss.
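One way to approximate that judgment is a simple rule for which conflicting events are movable. The sketch below uses a hypothetical heuristic (events with "all-hands", "offsite", or "company" in the title, or more than two attendees, are fixed; everything else can move); a real assistant would need much richer signals from your calendar.

```python
from dataclasses import dataclass
from datetime import datetime

FIXED_KEYWORDS = ("all-hands", "all hands", "offsite", "company")  # hypothetical


@dataclass
class Event:
    title: str
    start: datetime
    end: datetime
    attendees: int


def is_movable(event: Event) -> bool:
    """Hypothetical heuristic: 1:1s and tiny meetings can move; big or company-wide events can't."""
    if any(k in event.title.lower() for k in FIXED_KEYWORDS):
        return False
    return event.attendees <= 2


def slot_status(start: datetime, end: datetime, calendar: list[Event]) -> str:
    """Classify a proposed session slot as free, possible (with moves), or blocked."""
    conflicts = [e for e in calendar if e.start < end and start < e.end]  # overlapping events
    if not conflicts:
        return "free"
    if all(is_movable(e) for e in conflicts):
        return "possible if you move: " + ", ".join(e.title for e in conflicts)
    return "blocked"
```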

End-to-end workflow orchestration

The ultimate goal is an AI system that manages the entire recruitment workflow while maintaining quality and personal touch. This won’t happen overnight, and will require a lot of effort to both fine-tune the agents and integrate all of the tools to make it possible. Here’s what a phased approach might look like:

Phase 1: Assisted decision-making

- AI suggests screening criteria but human finalizes

- AI recommends participants but human approves

- AI drafts emails but human reviews before sending

Phase 2: Automated execution with human oversight

- AI executes approved workflows automatically

- AI provides regular status updates and flags issues

- Human reviews edge cases and exceptions

Phase 3: Intelligent autonomous operation

- AI manages routine recruitment independently

- Automatic escalation for complex situations

- Human involvement only for strategic decisions and problem-solving
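If you build this yourself, it can help to make the current phase an explicit setting that decides when a human must step in. This sketch is one hypothetical encoding of the three phases above; the flags (`workflow_approved`, `edge_case`, `strategic`) are assumptions about how you might label each action.

```python
from enum import Enum


class Phase(Enum):
    ASSISTED = 1     # AI suggests, human finalizes everything
    SUPERVISED = 2   # AI runs pre-approved workflows; humans handle exceptions
    AUTONOMOUS = 3   # AI runs routine work; only edge cases escalate


def needs_human(phase: Phase, *, workflow_approved: bool, edge_case: bool, strategic: bool) -> bool:
    """Decide whether an action must wait for a person, given the current phase."""
    if strategic:
        return True  # strategic decisions stay with humans in every phase
    if phase is Phase.ASSISTED:
        return True
    if phase is Phase.SUPERVISED:
        return edge_case or not workflow_approved
    return edge_case  # AUTONOMOUS: routine work proceeds, edge cases escalate
```

Moving from one phase to the next then becomes a single, reviewable configuration change rather than a rewrite of your agents.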

As you work through those phases, there are a few things to keep in mind:

Start with email templates, not full automation. Before building complex workflows, get comfortable with your AI-generated email templates.

- Test different approaches, measure response rates, and build a library of proven messaging that your automation can draw from.

- Spoiler alert: your ReOps team should be doing this already.

Implement intelligent fallbacks. Just like a good junior teammate, your agents need to know when to ask for help.

- What happens when AI can't categorize a response? When someone asks a question not covered in your FAQ? When scheduling gets complicated? What’s the procedure if your agent hallucinates something?

- You need to build clear escalation paths and fallback procedures into your agents. When they lack the information they need, most LLMs tend to guess (hallucinate) rather than admit uncertainty, so it’s helpful to a) explicitly tell them not to guess when building your prompt/context, and b) give them explicit instructions on what to do instead.

- For example, you’ll need to calibrate what confidence threshold the agent needs to be effective, but you can tell it, “If you’re less than X% confident, notify a human.” This article covers it nicely (don’t let the financial services context scare you).
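Here's a minimal sketch of that escalation rule. It assumes you've instructed the agent to answer in JSON with a `category` (or `"unknown"`) and a self-reported `confidence` between 0 and 1; both the format and the 0.8 threshold are hypothetical. Keep in mind that an LLM's self-reported confidence is a rough signal rather than a calibrated probability, so anything the code can't parse or make sense of also escalates to a human.

```python
import json

CONFIDENCE_THRESHOLD = 0.8  # the "X%" from the text; hypothetical, calibrate for your workflow


def route_reply(model_output: str) -> tuple[str, str]:
    """Act on the agent's classification only when it is parseable, known, and confident.
    Expects JSON such as {"category": "reschedule", "confidence": 0.93}."""
    try:
        data = json.loads(model_output)
        category = str(data["category"])
        confidence = float(data["confidence"])
    except (ValueError, KeyError, TypeError):  # JSONDecodeError is a ValueError
        return ("escalate", "unparseable model output")
    if not 0.0 <= confidence <= 1.0:  # also catches NaN
        return ("escalate", "confidence out of range")
    if category == "unknown":
        return ("escalate", "agent could not categorize the reply")
    if confidence < CONFIDENCE_THRESHOLD:
        return ("escalate", f"low confidence ({confidence:.2f}) for '{category}'")
    return ("act", category)
```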

Caveats & pitfalls

This is where the principles of Trust vs. Risk are critical. In a recent experiment, I asked an LLM to identify candidates and draft emails. It missed a key screening criterion, hallucinated the incentive amount ($50 instead of $40), and produced generic, repetitive emails. Human oversight is absolutely essential until you’ve fine-tuned your prompts and models.
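Some of these failures can be caught mechanically before a human even looks. This sketch checks hard screening criteria in plain code (rather than trusting the model to apply them) and flags any dollar amount in a draft that doesn't match the approved incentive. The function names and candidate fields are hypothetical, and the amount check only recognizes figures written like `$40` or `$1,000`; it supplements human review rather than replacing it.

```python
import re


def failed_criteria(candidate: dict, hard_criteria: dict) -> list[str]:
    """Names of hard screening criteria the candidate doesn't meet (exact match)."""
    return [key for key, required in hard_criteria.items() if candidate.get(key) != required]


def incentive_problems(email: str, approved_incentive: int) -> list[str]:
    """Flag dollar amounts in an AI-drafted email that differ from the approved incentive."""
    amounts = {int(m.replace(",", "")) for m in re.findall(r"\$(\d[\d,]*)", email)}
    problems = []
    if not amounts:
        problems.append("no incentive amount mentioned")
    wrong = sorted(a for a in amounts if a != approved_incentive)
    if wrong:
        problems.append(f"unexpected amount(s): {wrong}")
    return problems


print(incentive_problems("Thanks! You'll receive a $50 gift card.", 40))
```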

Implementing AI in your research workflow

You might be thinking that this all sounds great, but who has the bandwidth or expertise to build any of this? The short answer is: you do. Everyone does. The question isn’t whether you can, so much as what level of fidelity and technical complexity you can build to. You can get some value out of off-the-shelf LLMs, but like most things in life, you'll get a lot more out of it by putting some effort in.

Low-to-no effort/complexity

Use consumer-grade LLMs for one-off tasks (e.g. ask it for help generating survey questions). For example:

- Ask ChatGPT to generate 10 different ways to ask about a specific user pain point.

- Have Gemini draft an initial interview guide based on a project brief you paste in.

- Use Claude to rephrase a list of screener questions to be less leading.

Give it more complex requests, e.g. "Generate five open-ended questions to understand how small business owners manage their finances, based on the following context: [insert product goals]."

Medium effort/complexity

Set up custom assistants (e.g. Custom GPTs, Gems, Projects) for a variety of common tasks in your workflow. This isn't training in the machine-learning sense; it means giving the assistant a 'knowledge base' with your organization's unique context.

- Start by creating a document with your team’s research plan templates, user personas, common stakeholder questions, and previous research findings.

- Upload this document or paste the text directly into the custom LLM's setup.

- The time you invest here pays dividends on every future project, as your AI assistant becomes more and more tailored to your team's specific needs and voice.

High complexity (future state)

Use code and third-party integrations to build fully automated end-to-end solutions for research planning, recruiting, outreach, follow-ups, etc.

Toward the future

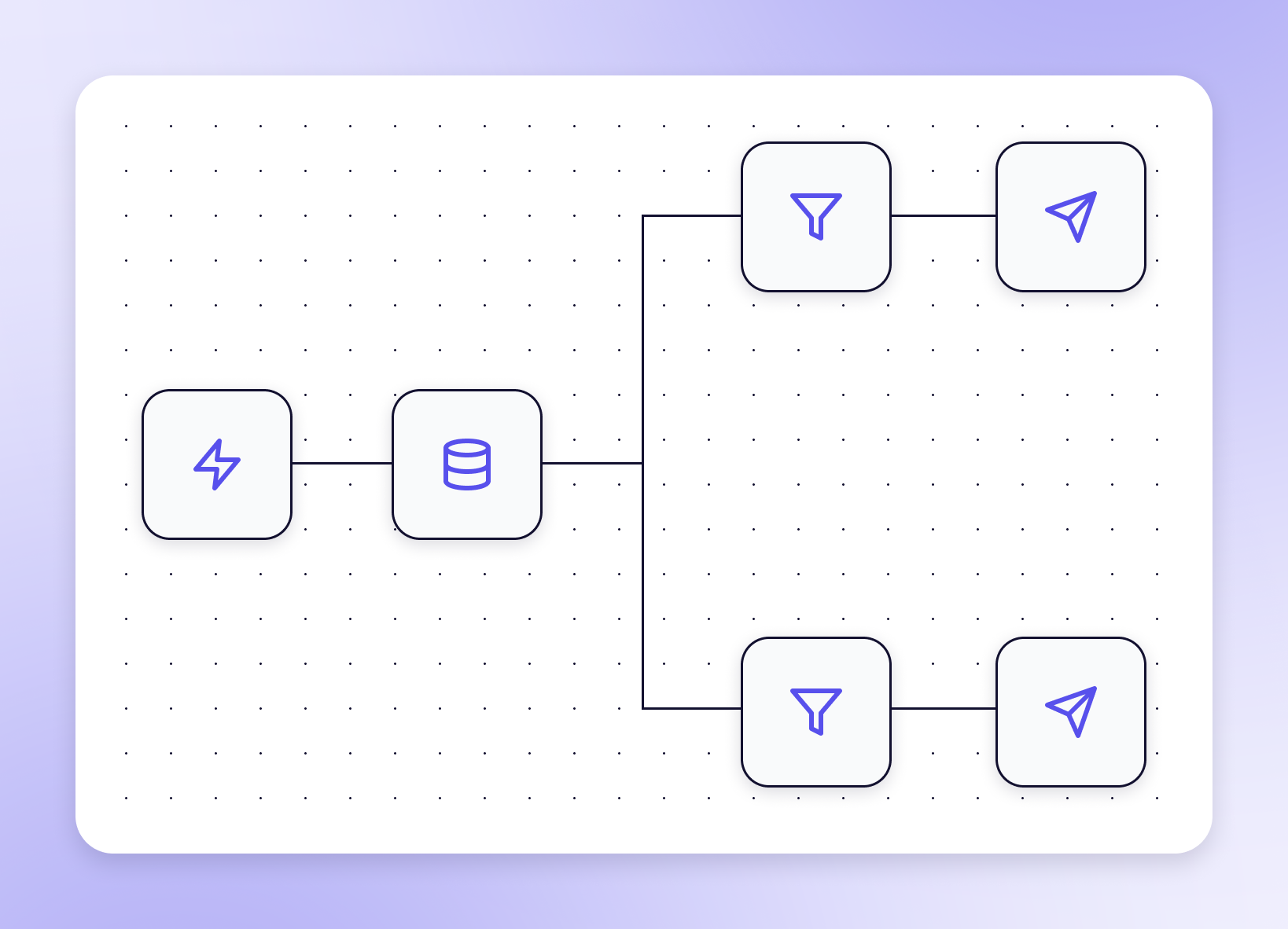

Next, let's map out what an advanced AI-enabled workflow might look like. Some of this isn't currently possible, but with a little help from vendors in the space (e.g. by enabling a Model Context Protocol, or MCP, server), we’re not far off. This workflow would likely involve some custom code; at the very least, anyone who intends to productize it will want to let users customize it to fit their use case, brand tone, etc.

Tool connections needed

- Knowledge base/document storage (e.g. Confluence, Google Docs, Notion)

- Project management tool (e.g. Jira, Trello, Monday)

- Product analytics (e.g. Heap, Mixpanel, Hotjar)

- Participant management (e.g. Great Question, User Interviews)

Workflow

- Whenever a new project is created that needs research, a Research Orchestrator agent automatically kicks off a process (using the Research Planning Assistant) to write a research plan proposal based on the objectives, scope, and key questions associated with that project.

- A Researcher reviews the research plan, makes any edits needed, and finalizes the plan with the Orchestrator.

- The Orchestrator, using the recruiting criteria in the plan, runs a few product analytics queries to identify relevant usage patterns, then sends contact info (if your privacy policy allows) to your participant management tool’s agent.

- Your participant management tool enforces any compliance and eligibility rules you’ve set, creating a user segment and sample project, drafting personalized emails for each candidate based on their past research experience, their product usage patterns, etc.

- A Researcher reviews the candidate pool and the emails to ensure no hallucinations have crept in, and if all looks good, provides the go-ahead to the Orchestrator to begin recruitment.

- The Orchestrator works alongside the Recruiter to batch invites, monitoring sign-up rates and Researcher availability to ensure they don’t overbook sessions.

- The Researcher shows up to the sessions without having to lift a finger.

What a dream, eh? Instead of thinking about one monolithic Research AI, start breaking things down into smaller parts and building multi-agent systems. Trying to build one agent to do all of this sounds crazy, but if you have one agent specialized in writing plans, one in recruiting, one in data gathering, one in analysis and synthesis, and one in knowledge management…this starts to look less like science fiction and more like reality.
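To illustrate the multi-agent idea, here's a simplified Python sketch of an orchestrator running specialized agents in sequence and pausing at the human checkpoints described in the workflow above. The agents are stubs standing in for real integrations, `approve` stands in for however your Researcher signs off, and every name here is hypothetical.

```python
from typing import Callable

# (step name, agent function, needs human sign-off afterwards?)
Step = tuple[str, Callable[[dict], dict], bool]


def run_pipeline(project: dict, steps: list[Step], approve: Callable[[str, dict], bool]) -> dict:
    """Run specialized agents in order, stopping if a human declines at a checkpoint."""
    state = dict(project)
    log: list[str] = []
    for name, agent, checkpoint in steps:
        state = agent(state)
        log.append(name)
        if checkpoint and not approve(name, state):
            state["halted_at"] = name
            break
    state["log"] = log
    return state


# Stub agents standing in for real integrations (all hypothetical).
def planning_agent(s: dict) -> dict:
    return {**s, "plan": f"Research plan for {s['name']}"}


def recruiting_agent(s: dict) -> dict:
    return {**s, "candidates": ["p-101", "p-102"]}


def outreach_agent(s: dict) -> dict:
    return {**s, "invites_sent": len(s["candidates"])}


STEPS: list[Step] = [
    ("planning", planning_agent, True),      # researcher reviews the plan
    ("recruiting", recruiting_agent, True),  # researcher reviews candidates and emails
    ("outreach", outreach_agent, False),
]

print(run_pipeline({"name": "Checkout study"}, STEPS, lambda name, s: True))
```

Keeping each agent small and swappable is the point: you can replace a stub with a real integration one step at a time without touching the rest of the pipeline.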

Measuring the success of AI implementation

As you implement any AI-enhanced workflows, you should establish performance metrics. This will help you understand what’s driving real improvement as well as justify further investment into these projects. Are you actually increasing efficiency and driving greater impact? Or is this just the latest edition of Research Theater?


Here are some ideas to help get you started:

Efficiency metrics

- Time from research request to study launch

- Hours spent on recruitment per study

- Email open rates and response rates

- No-show rates and cancellation patterns

Quality metrics

- Participant satisfaction scores

- Data quality assessments

- Stakeholder feedback on research plans

- Researcher confidence in AI recommendations

Strategic metrics

- Increase in research throughput

- Expansion into new user segments or methodologies

- Stakeholder self-service capabilities

- Researcher time allocated to strategic vs. tactical work
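Several of the efficiency metrics above are simple to compute once you log a few numbers per study. This sketch assumes a hypothetical per-study record; the field names are illustrative, and email open rates are left out because they depend on your email tool's tracking.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class StudyRecord:
    requested: date
    launched: date
    invites_sent: int
    replies: int
    sessions_booked: int
    no_shows: int
    recruiting_hours: float


def efficiency_metrics(s: StudyRecord) -> dict:
    """Compute a few efficiency metrics, guarding against division by zero."""
    return {
        "days_request_to_launch": (s.launched - s.requested).days,
        "response_rate": s.replies / s.invites_sent if s.invites_sent else 0.0,
        "no_show_rate": s.no_shows / s.sessions_booked if s.sessions_booked else 0.0,
        "recruiting_hours": s.recruiting_hours,
    }
```

Tracking the same numbers before and after you introduce AI into a step is what tells you whether it's actually helping.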

What's next in this series

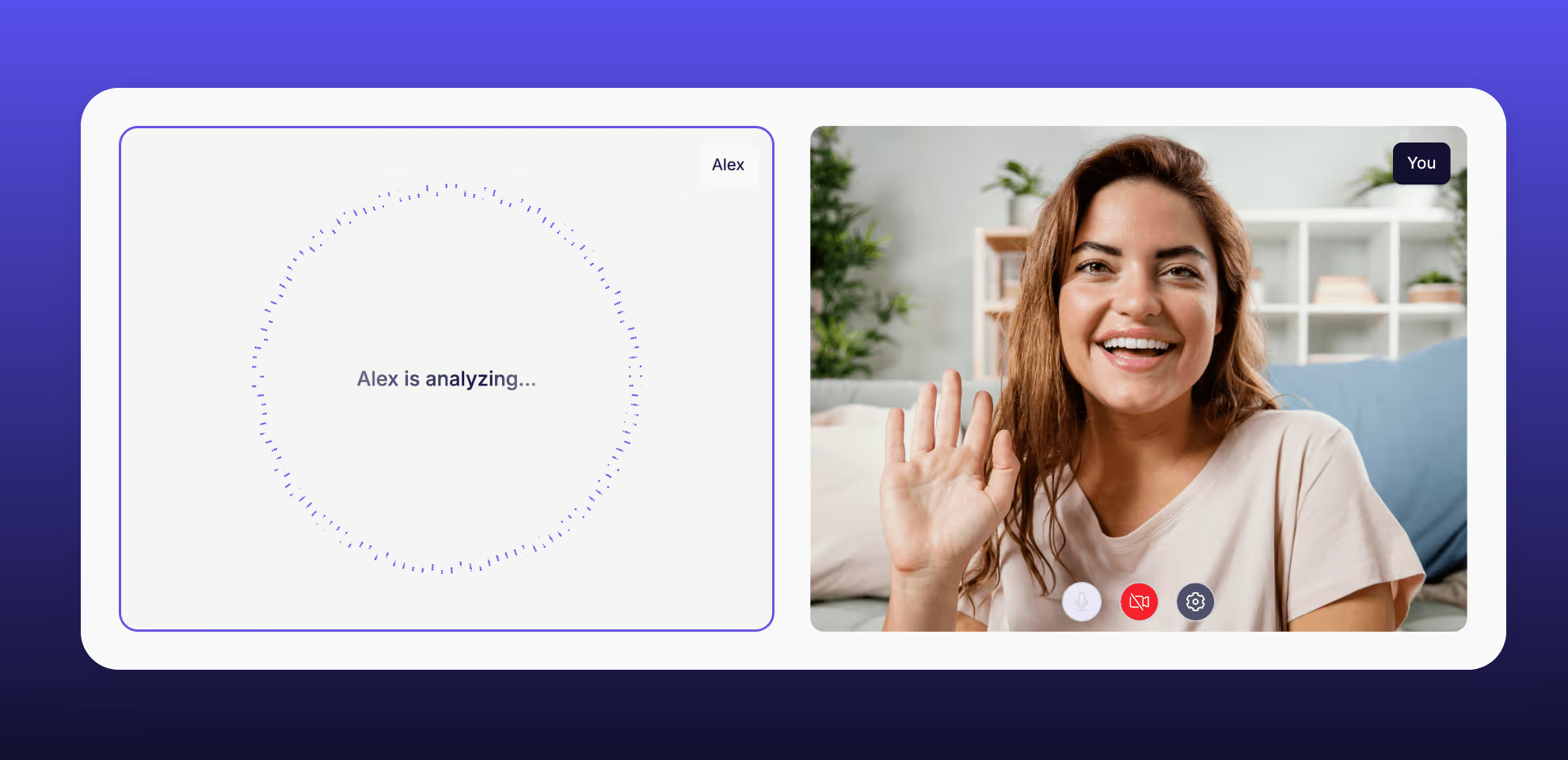

This tactical deep dive into planning and recruiting sets the foundation for more advanced AI applications. In the coming weeks, we'll continue to explore how AI is impacting data collection (namely AI moderation), analysis and synthesis, and knowledge management. Each post builds on what we've established here, moving from tactical efficiency gains to strategic organizational capabilities.

The future of research isn't about replacing human insight with artificial intelligence—it's about using AI to handle routine work so researchers can focus on strategic thinking, relationship building, and insight synthesis that actually move organizations forward. By mastering planning and recruiting automation, you're building the foundation for that future.

Ready to get started?

- Download the AI x UXR Tool Comparison Guide (featured in Part 1)

- Download the AI Research Planning Assistant Template (featured here in Part 2)

- Sign up to get notified when new AI guides & resources go live

And don't miss Part 3, where we'll explore how AI is changing the way we collect data and interact with participants.

Have you experimented with AI in your research planning or recruiting? I'd love to hear what's working (and what isn't). Reach out on LinkedIn or email me with your experiences.
